Abstract: This paper analyzes the core technologies of mainstream video coding standards (such as H.264 and AVS) and proposes a general video decoding scheme based on TI's OMAP3530 processor platform. The scheme makes full use of the hardware features of the OMAP3530, especially its 2D/3D graphics accelerator, to improve decoding speed. Experimental results show that the decoding rate for a QCIF bitstream in AVS format can reach 25 fps, which makes the scheme suitable for video decoding on portable multimedia terminals.

Keywords: OMAP3530; video decoding; hardware acceleration


Introduction

With the rapid development of multimedia technology and the advent of the 3G era, demand for multimedia services keeps growing, and various organizations have proposed a variety of video algorithms. The International Organization for Standardization has developed many international standards to regulate the development of multimedia technology. For multimedia communication terminals, TI's Open Multimedia Application Platform (OMAP) architecture combines the control capabilities of an ARM processor with the computing power of a DSP, and can therefore handle complex services (such as real-time video interaction) that a single DSP cannot. In 1998, TI introduced the scalable, open OMAP processor platform, which has since produced processors such as the OMAP310, OMAP710, OMAP1510, OMAP1610, OMAP2410 and OMAP2420. The OMAP3 architecture devices (OMAP3503, OMAP3515, OMAP3525, and OMAP3530) introduced in 2008 are built around an ARM Cortex-A8 core and a TMS320C64X+ DSP core, with more powerful control and computing capabilities. Because the OMAP series has always emphasized upward compatibility, the families have much in common, the structure changes little between them, and programs are easy to port.

Taking the OMAP3530 as an example, this paper analyzes the hardware structure and software programming features of the OMAP platform, summarizes techniques for using the IMGLIB library provided by TI, and compares the platform with the OMAP1510. Based on popular video coding standards, it then proposes a general decoding scheme for an OMAP3-based video decoder.

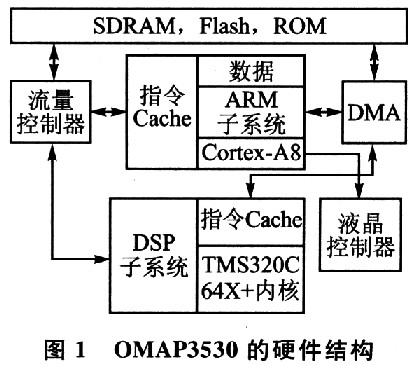

1 OMAP platform introduction

The Open Multimedia Application Platform (OMAP) combines a high-performance, low-power DSP core with a powerful ARM control core in an open, programmable architecture. It currently comprises mainly the OMAP1X, OMAP2X and OMAP3X series. Taking the OMAP3530 as an example, the hardware structure is shown in Figure 1.

1.1 OMAP3530 hardware platform

The hardware platform of the OMAP3530 consists mainly of an ARM core, a DSP core, and a traffic controller (TC).

(1) ARM core. The OMAP3530 uses an ARM Cortex-A8 core with an operating frequency of up to 720 MHz. It includes a memory management unit (MMU), a 16 KB instruction cache, a 16 KB data cache, and a 256 KB level-2 cache. The 64 KB of on-chip SRAM provides storage for the data and code of applications such as LCD display. The Cortex-A8 core is a 32-bit RISC processor with a 13-stage pipeline. The system control registers give access to the MMU, the caches, and the read/write buffer controllers. The ARM core has control over the entire system: it can set the clocks and other operating parameters of the DSP, the TC, and the various peripherals, and it can start and stop the DSP. The OMAP3530 platform supports advanced applications including graphics, multimedia content and Java programs.

(2) DSP core. The TMS320C64X+ core offers an excellent performance-to-power ratio, with an operating frequency of up to 520 MHz. It features a high degree of parallelism, 32-bit reads and writes, a powerful EMIF, two independently operating pipelines, and dual MAC units. It relies on three key innovations: improved idle power savings, variable-length instructions, and expanded parallelism. Its structure is highly optimized for multimedia applications and is well suited to low-power real-time speech and image processing. In addition, the TMS320C64X+ core adds hardware accelerators with hard-wired algorithms for motion estimation, 8×8 DCT/IDCT, and half-pixel interpolation, which reduces the power consumption of video processing.

(3) Traffic controller. The traffic controller (TC) controls access by the ARM, DSP, DMA and local bus to all memories (including SRAM, SDRAM, Flash and ROM) in the OMAP3530.

The OMAP3530 has a wealth of peripheral interfaces, such as an LCD controller, memory interface, camera interface, air interface, Bluetooth interface, universal asynchronous receiver/transmitter (UART), I2C host interface, pulse-width audio generator, serial interface, USB host/client port, Secure Digital/MultiMediaCard (SD/MMC) controller interface, and keyboard interface. These peripheral interfaces make OMAP-based systems more flexible and scalable.

1.2 OMAP3530 software platform

Two operating systems run on OMAP: an ARM-side operating system (such as WinCE or Linux) and DSP/BIOS on the DSP side. The core technology connecting the two is the DSP/BIOS bridge. OMAP supports a variety of real-time multitasking operating systems on the ARM microprocessor, which handle real-time multitask scheduling on the ARM and control and communicate with the TMS320C64X+; it also supports multiple real-time multitasking operating systems on the TMS320C64X+ for complex multimedia signal processing. The DSP/BIOS bridge comprises the DSP manager, the DSP management server, and the link drivers for the DSP and peripheral interfaces. It provides communication management services between applications running on the Cortex-A8 and algorithms running on the TMS320C64X+. Developers can use the application programming interface of the DSP/BIOS bridge to control the execution of real-time tasks on the DSP and to exchange task results and status messages with the DSP. In this environment, developers can call local DSP gateway components to implement functions such as video, audio, and voice. As a result, developers can build new applications without needing detailed knowledge of the DSP or the DSP/BIOS bridge. Application software developed with the standard application programming interfaces is compatible with future OMAP-based wireless devices.

2 Video coding standards and application of the OMAP image library

2.1 Video coding standards

Since 1988, ISO/IEC MPEG and ITU-T have developed a series of international video coding standards for different applications. MPEG produced MPEG-1, MPEG-2, and MPEG-4, and ITU-T produced H.261, H.263, H.263+/H.263++, and H.264. In December 2001, ISO and ITU-T formally established the Joint Video Team (JVT) to jointly develop the new H.264 coding standard. In June 2002, China's Ministry of Information Industry began formulating China's digital audio and video coding standard (AVS). AVS is a second-generation source coding standard with independent Chinese intellectual property rights. Compared with the currently popular standards (such as MPEG-2, MPEG-4, H.263, and H.264), in terms of coding efficiency MPEG-4 is 1.4 times as efficient as MPEG-2, while AVS and H.264 are more than twice as efficient as MPEG-2. In terms of algorithm complexity, H.264 is 4 to 5 times more complex than MPEG-2 at the encoder and 2 to 3 times more complex at the decoder; AVS is significantly less complex than H.264 and does not require high patent fees.

The widely used video coding standards basically share the following steps. The image sequence is coded in one of two modes, intra mode or inter mode. In intra coding, each 8×8 pixel block is DCT-transformed directly, and the quantized coefficients are then variable-length coded to form the output bitstream; on a second path, the quantized coefficients are inverse-quantized and inverse-DCT-transformed to form a reconstructed image, which is stored in the frame memory. In inter coding, motion estimation is performed on each block of the original data, and the motion-compensated prediction is subtracted from the block to produce a difference image. The difference image is DCT-transformed and quantized, then coded together with the motion vector data to form the bitstream. On the second path, the quantized data is inverse-quantized and inverse-DCT-transformed to form a reconstructed image, which is stored in the frame memory for the next motion estimation.
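The reconstruction path described above (inverse quantization, inverse DCT, then adding the prediction) can be sketched as follows. This is a minimal illustration, not the paper's implementation: it assumes a floating-point orthonormal 8×8 DCT and a single uniform quantization step, whereas real standards define their own integer transforms and quantization tables. All function names are hypothetical.

```python
import math

N = 8  # block size used by the DCT-based schemes described above


def _c(k):
    # Orthonormal DCT-II scaling factor
    return math.sqrt(1.0 / N) if k == 0 else math.sqrt(2.0 / N)


def _basis(x, u):
    return math.cos((2 * x + 1) * u * math.pi / (2 * N))


def dct2(block):
    """Forward 8x8 DCT-II of a list-of-lists block (encoder side)."""
    return [[_c(u) * _c(v) * sum(block[x][y] * _basis(x, u) * _basis(y, v)
                                 for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]


def idct2(coef):
    """Inverse 8x8 DCT (used on the reconstruction path)."""
    return [[sum(_c(u) * _c(v) * coef[u][v] * _basis(x, u) * _basis(y, v)
                 for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]


def quantize(coef, step):
    # Assumption: one uniform step for all coefficients
    return [[round(c / step) for c in row] for row in coef]


def dequantize(levels, step):
    return [[level * step for level in row] for row in levels]


def decode_inter_block(levels, step, prediction):
    """Dequantize, inverse-transform, add the prediction, clip to 8 bits."""
    residual = idct2(dequantize(levels, step))
    return [[max(0, min(255, round(prediction[x][y] + residual[x][y])))
             for y in range(N)] for x in range(N)]
```

For an intra block the same path applies with the prediction set to zero (or to the intra predictor, in standards that use intra prediction).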

Different standards have their own characteristics. For example, MPEG-1 and H.261 use integer-pixel motion estimation, MPEG-4 and H.263 use half-pixel accuracy, and H.264 and AVS use 1/4- to 1/8-pixel accuracy; H.261 uses a single reference frame, whereas H.264 and AVS use multiple reference frames. In particular, the H.264 standard uses advanced techniques such as integer DCT/IDCT, intra prediction, multi-mode motion estimation, and a deblocking filter, which leads to high algorithm complexity and places high demands on real-time hardware decoding.
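To make the notion of sub-pixel accuracy concrete, the sketch below fetches a sample at half-pixel coordinates by bilinear averaging with upward rounding. This is one simple form of half-pixel interpolation, shown only as an illustration; the exact filters and rounding rules differ between standards, and H.264 in particular uses longer multi-tap filters. The function name and the doubled-coordinate convention are assumptions made for this example.

```python
def sample_half_pel(frame, x2, y2):
    """Return the sample at position (x2 / 2, y2 / 2) of a 2-D luma array.

    x2 and y2 are coordinates in half-pixel units, so odd values fall
    between two integer-pixel positions.
    """
    x, y = x2 >> 1, y2 >> 1          # integer-pixel part
    frac_x, frac_y = x2 & 1, y2 & 1  # 1 if the position lies halfway
    a = frame[y][x]
    if not frac_x and not frac_y:
        return a                                    # integer position
    if frac_x and not frac_y:
        return (a + frame[y][x + 1] + 1) >> 1       # horizontal half
    if frac_y and not frac_x:
        return (a + frame[y + 1][x] + 1) >> 1       # vertical half
    # Diagonal half: average of the four surrounding pixels
    return (a + frame[y][x + 1] + frame[y + 1][x] + frame[y + 1][x + 1] + 2) >> 2
```

A motion vector expressed in half-pixel units then simply offsets `x2` and `y2` before the fetch, which is why each extra bit of vector precision multiplies the interpolation work the decoder must do.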

2.2 Application of the OMAP image library (IMGLIB)

For image and video processing, TI provides the IMGLIB library, which can be called from C programs. The library has two main parts:

(1) Hardware acceleration part. The routines are written in assembly language, but the computation is carried out by the hardware acceleration modules and cannot be modified. For example, DCT/IDCT is performed on 8×8 blocks with a fixed transform matrix. There are 16 kinds of hardware acceleration instructions, including 1 DCT/IDCT instruction, 10 motion estimation instructions, and 4 interpolation instructions.

(2) Software acceleration part. The routines are written in assembly language and include matrix quantization and inverse quantization, JPEG variable-length coding, one-dimensional and two-dimensional discrete wavelet transforms and their inverses, wavelet packet transforms and their inverses, image histogram calculation, edge detection, shift operations, and 3×3 mask operations. These software acceleration routines provide a standard C interface, so users can call them directly or compile them into their own library files.

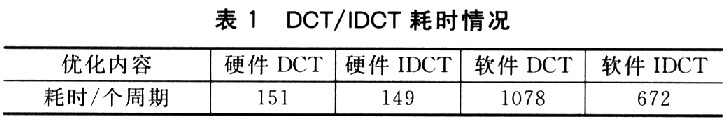

In video encoding and decoding, motion estimation, DCT/IDCT and pixel interpolation account for a large share of the computing time. The hardware acceleration units provided by the OMAP platform can perform these operations efficiently without occupying CPU clock cycles (here, "without occupying" refers to the computation itself; data input and output still take a small amount of time). The optimized software acceleration routines also complete their operations faster. Taking DCT/IDCT as an example, the time consumption is listed in Table 1.

As can be seen from Table 1, the hardware DCT takes about 1/7 of the time of the software DCT, and the hardware IDCT takes about 1/4.5 of the time of the software IDCT. Using the hardware acceleration modules can therefore greatly increase processing speed and reduce power consumption.

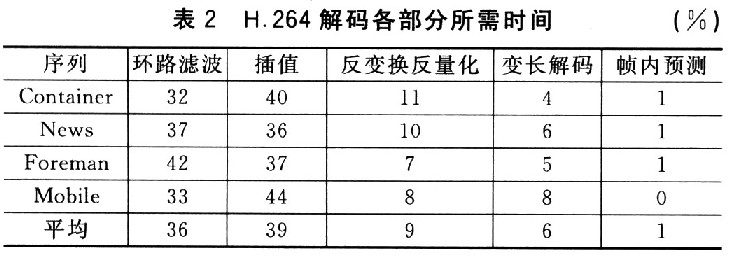

For the latest H.264 and AVS standards, the OMAP3530 needs to exploit the hardware acceleration of the OMAP series. The hardware accelerator of the OMAP3530 integrates a half-pixel interpolation module, an integer DCT/IDCT-like transform module, and a deblocking filter. The time required for each part of H.264 decoding on a general-purpose computer is listed in Table 2.

As can be seen from Table 2, loop filtering, interpolation, and inverse transform with inverse quantization account for more than 70% of the computation time in H.264 decoding. Therefore, when the OMAP3530 is used for H.264 and AVS decoding, making effective use of its hardware acceleration resources improves computational efficiency and makes real-time decoding achievable. Beyond the hardware accelerator, the architecture of the OMAP3530 is also well suited to video processing, mainly for the following reasons:

(1) Few multimedia chips on the market integrate both an ARM and a DSP. OMAP can host an embedded operating system (Linux, WinCE, etc.) on a single chip and benefits from the extensive algorithm support offered by TI's many third parties. Programming on top of an operating system is more flexible and convenient, and it makes product software easy to upgrade. In contrast, a single DSP cannot provide the functions of an operating system. If a separate ARM processor is added to host the operating system, the cost and the hardware and software complexity will be greater than with the OMAP platform.

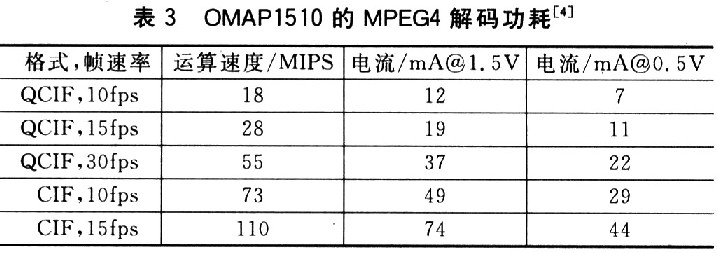

(2) Power consumption considerations. Table 3 lists the power consumption when running MPEG-4 decoding on the OMAP1510.

On the OMAP1510 platform, the power consumption is 9.9 to 28.5 mW for QCIF (a commonly used standardized image format) at 15 fps. With a common 650 mAh mobile phone battery, the platform can work continuously for 34 to 59 hours, which is clearly sufficient for general applications. By comparison, TI's dedicated multimedia processor DM642 consumes about 1.5 W, which is 50 to 150 times the power of the OMAP. Portable multimedia terminals do not require very high computing power, so the OMAP platform meets their needs while saving battery power.

(3) Speed considerations. The TMS320C64X+ can execute up to 8 instructions in parallel, so its theoretical peak rate is 4160 MIPS (at 520 MHz). This is slightly lower than the fastest current multimedia processor, the DM642 (4800 MIPS at 600 MHz), but the two target different applications. The DM642 is used mainly for real-time encoding and other tasks with high speed requirements, while OMAP is used mainly for decoding on handheld devices. Taking the Baseline Profile of H.264 as an example, its complexity is 20% to 30% higher than that of MPEG-4. MPEG-4 requires 28 MIPS at QCIF, 15 fps; the H.264 Baseline Profile correspondingly requires 40 MIPS.

(4) Program structure considerations. The DSP's on-chip memory is the fastest but is very limited, so off-chip data must be moved into on-chip memory before it is processed. Since current coding methods are all macroblock-based and a macroblock is at most 16×16, a common approach is to use DMA to move the data that will be needed into on-chip memory in advance. DMA transfers are fast and can run either in parallel with computation or serially.

(5) Software acceleration considerations. The most time-consuming parts can be rewritten in assembly language following the same coding rules as IMGLIB, and optimized at both the C level and the assembly level with the help of TI's data sheets. Since the efficiency of code generated by the TI compiler has been improving steadily, C is recommended for the sake of generality and readability.
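The macroblock prefetch described under the program-structure consideration above is often organized as a two-buffer ("ping-pong") scheme. The sketch below models it sequentially: a plain list copy stands in for the DMA transfer, and the names `decode_with_ping_pong` and `process` are hypothetical. On real hardware the copy into the idle buffer would be started by the DMA controller and would overlap with processing of the active buffer.

```python
def decode_with_ping_pong(external_mbs, process):
    """Process macroblocks held in 'external memory' using two on-chip buffers.

    external_mbs: list of macroblocks (each a list of samples) in off-chip memory.
    process:      function applied to one macroblock held in an on-chip buffer.
    """
    results = []
    if not external_mbs:
        return results

    buffers = [None, None]                 # the two on-chip buffers
    buffers[0] = list(external_mbs[0])     # prime the first buffer (stand-in for DMA)

    for i in range(len(external_mbs)):
        cur = i & 1                        # buffer holding the current macroblock
        nxt = cur ^ 1                      # buffer free to receive the next one
        if i + 1 < len(external_mbs):
            # Prefetch: on hardware this transfer would run in the background
            buffers[nxt] = list(external_mbs[i + 1])
        results.append(process(buffers[cur]))
    return results
```

For example, `decode_with_ping_pong([[1, 2], [3, 4], [5, 6]], sum)` processes three two-sample "macroblocks" while only ever holding two of them on chip at once.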

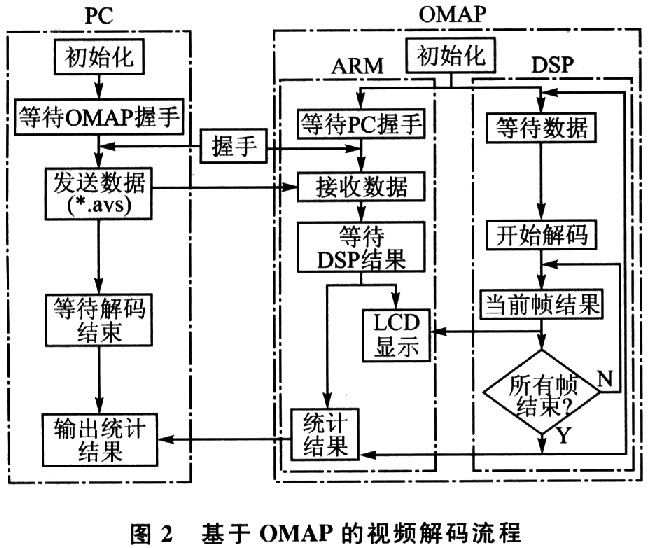
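The peak-rate figures quoted in the speed consideration above follow from multiplying the number of instructions issued per cycle by the clock frequency. The short sketch below reproduces that arithmetic and expresses the quoted 40 MIPS requirement as a share of the peak; the function names are hypothetical, and peak MIPS is only an upper bound that real code does not reach.

```python
def peak_mips(instructions_per_cycle, clock_mhz):
    """Theoretical peak rate in MIPS for a given issue width and clock."""
    return instructions_per_cycle * clock_mhz


def load_fraction(required_mips, available_mips):
    """Share of the theoretical peak that a workload would occupy."""
    return required_mips / available_mips


c64x_peak = peak_mips(8, 520)    # TMS320C64X+ at 520 MHz
dm642_peak = peak_mips(8, 600)   # DM642 at 600 MHz
h264_share = load_fraction(40, c64x_peak)  # 40 MIPS figure quoted in the text
```

Under these figures, the quoted QCIF, 15 fps requirement is below 1% of the theoretical peak, which is consistent with the text's point that handheld decoding does not need the fastest available processor.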

3 Real-time video decoding software implementation on OMAP

A program developed for OMAP is usually divided into two parts: the ARM is responsible for control, display, and so on, while the DSP is responsible for data processing. Both parts are developed with CCS, the DSP development tool provided by TI. The video decoding process is shown in Figure 2.

ARM side: The ARM initializes the entire OMAP3530 chip, including the clock settings of the ARM, DSP, and TC, the start-up and reset of the DSP, and the initialization of the LCD, timers and other peripherals. After start-up, the ARM core continuously polls a flag in shared memory. When the decoding of a frame is complete, it starts the dedicated LCD DMA and the frame is displayed on the LCD.
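The flag polling described above can be modeled with two threads sharing one object. This is only a sketch of the handshake, with several assumptions: the class and function names are hypothetical, a Python thread stands in for the DSP, a callback stands in for starting the LCD DMA, and the producer waiting for the flag to be cleared before writing the next frame is an assumed protocol detail that the text does not specify.

```python
import threading
import time


class SharedMemory:
    """Stand-in for the memory region visible to both cores."""

    def __init__(self):
        self.frame_ready = False   # the flag polled by the ARM side
        self.frame = None


def dsp_side(shm, frames):
    """Producer: publish each decoded frame, then raise the flag."""
    for frame in frames:
        while shm.frame_ready:     # assumed: wait until the last frame was shown
            time.sleep(0.001)
        shm.frame = frame
        shm.frame_ready = True


def arm_side(shm, n_frames, display):
    """Consumer: poll the flag, display the frame, clear the flag."""
    shown = 0
    while shown < n_frames:
        if shm.frame_ready:
            display(shm.frame)     # stand-in for starting the LCD DMA
            shm.frame_ready = False
            shown += 1
        else:
            time.sleep(0.001)


def run_demo(frames):
    shm = SharedMemory()
    displayed = []
    dsp = threading.Thread(target=dsp_side, args=(shm, frames))
    dsp.start()
    arm_side(shm, len(frames), displayed.append)
    dsp.join()
    return displayed
```

Calling `run_demo(["frame0", "frame1", "frame2"])` returns the frames in decoding order, because each side only touches the frame buffer while it "owns" the flag.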

DSP side: The DSP is responsible for decoding the compressed video. The compressed bitstream is placed in SDRAM. The main difference from a PC-based decoder is that the DSP's on-chip memory is limited, so the current frame and the reference frame cannot both be kept on chip; data is therefore transferred between SDRAM and on-chip memory in units of macroblocks. In addition, because the image must be in RGB format for display on the LCD, a YUV-to-RGB conversion is performed at the end of each frame to enable real-time display.
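The YUV-to-RGB step mentioned above can be sketched as follows. The paper does not state which conversion matrix or sample range it uses, so this example assumes the common full-range BT.601 (JFIF-style) equations and a planar 4:2:0 layout in which each chroma sample covers a 2×2 block of luma samples; the function names are hypothetical.

```python
def _clip(value):
    """Round and clamp to the 8-bit range."""
    return max(0, min(255, int(round(value))))


def yuv_to_rgb(y, u, v):
    """Convert one full-range BT.601 YUV sample to an (R, G, B) tuple."""
    d = u - 128
    e = v - 128
    r = y + 1.402 * e
    g = y - 0.344136 * d - 0.714136 * e
    b = y + 1.772 * d
    return _clip(r), _clip(g), _clip(b)


def yuv420_to_rgb(y_plane, u_plane, v_plane):
    """Convert planar 4:2:0 data (lists of rows) to rows of RGB tuples."""
    height, width = len(y_plane), len(y_plane[0])
    return [[yuv_to_rgb(y_plane[r][c],
                        u_plane[r // 2][c // 2],   # chroma is subsampled 2:1
                        v_plane[r // 2][c // 2])
             for c in range(width)]
            for r in range(height)]
```

On a DSP this loop would normally be written with fixed-point coefficients rather than floating point; the floating-point form is used here only to keep the equations readable.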

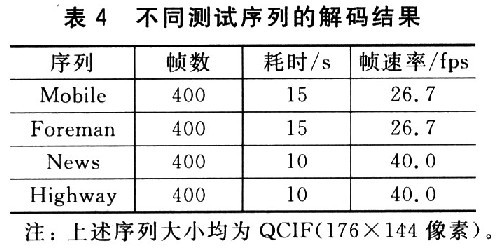

4 Experimental results

AVS decoding was implemented on the OMAP3530 platform. Table 4 shows the experimental data obtained on the OMAP3530.

Conclusion

The OMAP architecture proposed by TI is open, and programs written for it are easy to port and well suited to multimedia platform applications. More and more manufacturers are choosing OMAP chips as the basis for mobile multimedia video. The combination of OMAP and popular video standards also has good application prospects in mobile communication and multimedia signal processing.
