If you were around in the 1980s and 1990s, you may remember the now-vanished "computer phobia".

Well into the early 2000s, I personally saw many people become anxious, fearful, and even aggressive as personal computers appeared in our lives, workplaces, and homes.

Although some people were fascinated by computers and saw their potential, most people did not understand them. To these people computers were unfamiliar, confusing, and in many ways threatening. They were afraid of being replaced by technology.

Most people's attitude towards technology was resentment at best and panic at worst. Perhaps this is how people react to any change.

It is worth noting that most of what we worry about never comes to pass.

Fast-forward a few years: the people who worried about computers now cannot live without them, and they use computers for their own benefit. Computers did not replace us and did not cause mass unemployment. Instead, we can no longer imagine life without laptops, tablets, and smartphones. What once looked like a threat has become the comfortable status quo, and our fears did not materialize.

But at the same time, in the 1980s and 1990s, no one warned us about the threats that computers and the Internet would actually pose: ubiquitous mass surveillance; hackers going after our network infrastructure or personal data; psychological alienation through social networks; the loss of our patience and attention span; the ease of political or religious radicalization online; and hostile foreign powers hijacking social networks to undermine national sovereignty.

If most of our fears turn out to be irrational, the reverse also holds: the truly worrying consequences of past technological change were things most people did not worry about until they had already become reality.

One hundred years ago, we could not really predict that the transportation and manufacturing technologies being developed would enable a new form of industrial warfare that would kill tens of millions of people in two world wars. We did not recognize early on that the invention of the radio would enable a new form of mass communication that would help fascism rise in Italy and Germany.

While theoretical physics was developing in the 1920s and 1930s, there were no correspondingly anxious, forward-looking reports about how these theoretical advances could quickly be turned into nuclear weapons, leaving the world under a permanent threat of annihilation. And today, even after more than a decade of warnings about the climate, a large share of Americans (44%) still choose to ignore the problem.

As a civilization, we seem to have difficulty correctly identifying future threats and worrying about them appropriately, just as we seem prone to panicking over irrational fears.

Today, as so often in the past, we are in the midst of a new wave of fundamental change: cognitive automation, which can be summarized under the label "artificial intelligence (AI)." And, as in the past, we worry that this new technology will hurt us: that artificial intelligence will lead to mass unemployment, or that it will acquire agency of its own, become superhuman, and destroy us.

But what if these fears are misplaced, as so many earlier ones were? What if the real danger of artificial intelligence is very different from the current "superintelligence" and "singularity" narratives?

In this article, I want to talk about what actually worries me about artificial intelligence: the highly effective, highly scalable manipulation of human behavior that artificial intelligence makes possible, and its abuse by businesses and governments.

Of course, this is not the only tangible risk. There are many others, especially those related to harmful biases in machine learning models, and other people have written about those issues far better than I could.

This article approaches the subject from the angle of mass population manipulation because I believe this risk is urgent and seriously underestimated.

Today this risk is already a reality, and several technological trends will amplify it over the next few decades.

Our lives are becoming more digital, and social media companies are learning more and more about our lives and ideas. At the same time, they increasingly control our access to information, especially through algorithms. They treat human behavior as an optimization problem, an artificial intelligence problem: social media companies iteratively adjust what their algorithms show in order to elicit a particular behavior, just as a game-playing AI iteratively refines its strategy. The only bottleneck in this process is the algorithm itself. These things are happening or coming soon, and the largest social networking companies are currently investing billions of dollars in artificial intelligence research.

I will break the discussion of these issues into the following sections:

Social media is a psychological prison

Content consumption is a psychological control carrier

User behavior is an issue to be optimized

Current situation

The opposite: what artificial intelligence can do for us

The construction of anti-social media

Conclusion: on the way forward

First, social media is a psychological prison

In the past 20 years, our private and public lives have moved online. We spend more time staring at screens every day. Our world is moving toward a state in which most of what we do consists of consuming, modifying, or creating content.

The flip side of this trend is that companies and governments are collecting vast amounts of data about us, especially through social networks: who we talk to, what we say, what we look at (pictures, movies, music, and news), what mood we are in at specific times, and so on. In the end, almost everything related to us will be recorded as data on some server.

In theory, these data enable the companies and governments that collect them to build very accurate psychological profiles of individuals and groups. Your opinions and behavior can be correlated against thousands of "likes", yielding a remarkably accurate understanding of the choices you make, perhaps a better one than you could reach through introspection alone.

For example, Facebook "likes" let algorithms judge a person's personality better than that person's friends can. A large amount of data makes it possible to predict when (and with whom) a user will start a relationship, when a relationship will end, who is at risk of suicide, and who a user will ultimately vote for in an election, even while that user is still undecided.

This applies not only to individuals. Large groups are even easier to predict, because a large amount of data averages out randomness and outliers.

Second, content consumption is a psychological control carrier

Collecting data is just the beginning: social networks increasingly control what information we receive.

Currently, most of what we see is selected by algorithms. Algorithms increasingly influence and determine the political articles we read, the movie trailers we see, the people we keep in touch with, and even the feedback we receive about ourselves.

Accumulated over many years of exposure, algorithmic curation has a considerable impact on our lives: on who we are and who we become.

If Facebook gets to decide, over a long period, which news (real or fake) you see, whose political status updates you see, and even who sees your own posts, then Facebook effectively controls your worldview and political beliefs.

Facebook's business is to influence people, and that is the service it sells to advertisers.

Facebook has built a finely tuned algorithmic engine that can affect more than your perception of a brand or your decision to buy a smart speaker. By adjusting the content it feeds you, the same engine can influence and control your emotions, and it could even change election results.

Third, user behavior is an issue to be optimized

Social networks are able to control what we receive while measuring everything we do, and all of this is already happening.

When you have access to both perception and action, you are looking at an artificial intelligence problem.

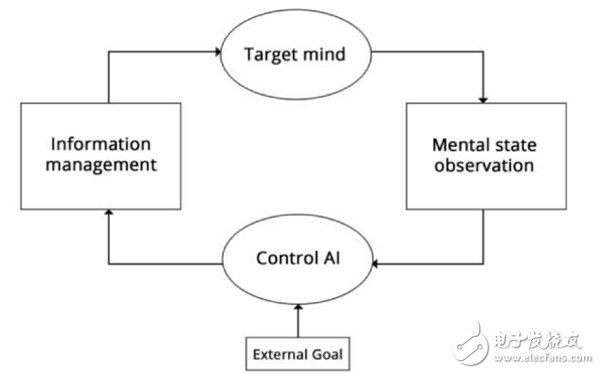

You can build an optimization loop of behavior in which you can observe the current state of the target and continually adjust the information provided until the target. A large subset of the field of artificial intelligence—especially “enhanced learningâ€â€”is about developing algorithms to effectively solve problems that are to be optimized, thereby closing the loop and fully controlling the target. In this case, by moving our lives to the digital realm, we are vulnerable to artificial intelligence.

Human line intensive learning cycle
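The observe-and-adjust loop described above can be sketched as a toy epsilon-greedy bandit. This is only an illustration under stated assumptions: the simulated user, its hidden engagement probabilities, and the names `simulated_user` and `run_loop` are all hypothetical, and real systems are far more elaborate.

```python
import random


def simulated_user(content_id, rng):
    """Hypothetical user: returns 1 if they engage with the content, else 0."""
    engage_prob = [0.2, 0.5, 0.8]  # made-up values, hidden from the algorithm
    return 1 if rng.random() < engage_prob[content_id] else 0


def run_loop(steps=5000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    n_items = 3
    shown = [0] * n_items    # how often each kind of content was shown
    value = [0.0] * n_items  # running mean of observed engagement
    for _ in range(steps):
        # Action: usually show the best-known content, sometimes explore.
        if rng.random() < epsilon:
            item = rng.randrange(n_items)
        else:
            item = max(range(n_items), key=lambda i: value[i])
        # Perception: observe how the target reacted.
        reward = simulated_user(item, rng)
        # Update the estimate with an incremental mean.
        shown[item] += 1
        value[item] += (reward - value[item]) / shown[item]
    return shown, value


shown, value = run_loop()
print("times shown:", shown)
print("estimated engagement:", [round(v, 2) for v in value])
```

The point of the sketch is that the loop needs nothing more than a measurement of the reaction and control over what is shown next; it gradually concentrates on whatever content the simulated user responds to most.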

The human mind is highly susceptible to simple patterns of social manipulation, which makes it all the more vulnerable to attack and control. Consider, for example, the following vectors:

Identity reinforcement: A trick that has been used for as long as advertising has existed, and it still works. It associates specific content with markers you identify with, which makes it possible to steer which content you embrace. In social media where AI is involved, the algorithm can make sure you only see content (whether news or a post from a friend) that pairs the views it favors with your identity markers, and it can keep you away from content that does not.

Negative social reinforcement: If a post you publish expresses an idea that the algorithm does not want you to hold, the system can choose to show your post only to those who hold the opposite view (acquaintances, strangers, or even bots). Repeated many times, this may push you away from your initial view.

Positive social reinforcement: If the view you express in a post is one the algorithm wants to spread, the algorithm can choose to show the post to people who will "like" it (or even to bots). This strengthens your belief and makes you feel that you are part of the majority.

Sampling bias: The algorithm also controls which posts from your friends (or from the mass media) you are shown, and it can favor those that support a given view. In such an information bubble, these views will seem to have much wider support than they do in reality.

Argument personalization: The algorithm may also observe how people whose psychological attributes are similar to yours respond to content; after all, "birds of a feather flock together." The algorithm can then serve you the content that proved most effective on people with similar views and life experiences. In the long run, the algorithm may even be able to generate the most effective content for you from scratch.
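As a rough illustration of the sampling-bias vector in the list above, the following sketch computes how much support a view appears to have inside a filtered feed. The function name `perceived_support` and all the numbers are assumptions chosen for illustration, not measurements.

```python
def perceived_support(actual_support, show_prob_for, show_prob_against):
    """Expected share of supporting posts among the posts actually shown.

    actual_support: true fraction of posts that support the view.
    show_prob_for / show_prob_against: probability that the feed shows
    a supporting / opposing post (hypothetical filter settings).
    """
    shown_for = actual_support * show_prob_for
    shown_against = (1.0 - actual_support) * show_prob_against
    return shown_for / (shown_for + shown_against)


actual = 0.30
perceived = perceived_support(actual, show_prob_for=1.0, show_prob_against=0.25)
print(f"actual support: {actual:.0%}, perceived in feed: {perceived:.0%}")
```

With these made-up settings, a view held by 30% of posters looks like a clear majority position to someone who only sees the filtered feed.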

From an information security perspective, you would call these vulnerabilities: known exploits that can be used to take over a system. In the case of the human mind, these vulnerabilities will never be patched. They are simply the way we work, and they are in our DNA.

The human mind is a static, vulnerable system that is increasingly controlled by artificial intelligence algorithms, which are beginning to fully control what we receive while fully understanding what we do and believe.

Fourth, the current situation

It is worth noting that applying artificial intelligence algorithms to the way we obtain information can enable mass population manipulation, especially political control, and that the algorithms used for this need not be particularly advanced.

Artificial intelligence does not need self-awareness to be this kind of threat; current technology may well be enough. Social networking companies have been researching this area for a long time and have achieved significant results.

While these companies may only be trying to maximize "engagement" and influence your purchasing decisions, rather than manipulate your view of the world, the tools they have developed have already been used by hostile actors in political events, for example the 2016 Brexit referendum and the 2016 US presidential election. This is a reality we must face. But if mass population manipulation is already possible today, at least in theory, why has the world not been turned upside down yet?

In short, I think it is because we are still not good at artificial intelligence. But that also means there is a lot of untapped potential, and this may be about to change.

Until 2015, all advertising-related algorithms across the industry ran on logistic regression. In fact, this is still largely true today; only the biggest players in the industry use more advanced models.

Logistic regression is an algorithm that predates the computing era. It is one of the most basic techniques for personalized recommendation. This is why so many of the advertisements you see on the Internet have nothing to do with you. Similarly, the social media bots that hostile actors have used to influence public opinion involve almost no artificial intelligence, and their methods are currently very primitive.
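To give a sense of how basic logistic regression is, here is a minimal from-scratch sketch of click prediction trained with plain gradient descent. The two features, the tiny data set, and the function names are invented for illustration and do not describe any real advertising system.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Predicted click probability for one feature vector."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


def train(features, clicks, lr=0.5, epochs=2000):
    """Fit weights by batch gradient descent on the log loss."""
    n = len(features)
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(features, clicks):
            err = predict(weights, bias, x) - y  # gradient of log loss w.r.t. z
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


# Made-up data: [likes_sports, likes_cooking] -> clicked a sports ad?
features = [[1, 0], [1, 1], [0, 1], [0, 0], [1, 0], [0, 1]]
clicks = [1, 1, 0, 0, 1, 0]

weights, bias = train(features, clicks)
p_sports = predict(weights, bias, [1, 0])
p_cooking = predict(weights, bias, [0, 1])
print(f"sports fan: {p_sports:.2f}, cooking fan: {p_cooking:.2f}")
```

The whole model is one weight per feature plus a bias, which is why it can only capture very coarse patterns about a user.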

Machine learning and artificial intelligence have progressed rapidly in recent years, and that progress has only begun to be applied to targeting algorithms and social media bots. Deep learning only started to be used in news feeds and advertising networks around 2016.

Who knows what will happen next?

It is striking that Facebook has been investing heavily in artificial intelligence research and development, with the ambition of becoming a leader in the field. When your product is a news feed, what use do you have for natural language processing and reinforcement learning?

We are looking at a company that builds fine-grained psychological profiles of nearly 2 billion people, serves as a major news source for many of them, runs large-scale behavior manipulation experiments, and aims to develop the most advanced artificial intelligence technology in the world. Personally, it scares me.

In my opinion, Facebook may not even be the most worrying actor. Many people like to imagine a few big corporations ruling the world, but these companies have far less power than governments. If our minds can be controlled through algorithms, governments are likely to become the worst offenders, not corporations.

Now, what can we do? How do we protect ourselves? As technologists, what can we do to avert the risk of mass manipulation through social media?

Fifth, the opposite: what artificial intelligence can do for us

Importantly, the existence of this threat does not mean that all algorithmic curation is bad, or that all advertising is bad. Both can have real value.

With the rise of the Internet and artificial intelligence, applying algorithms to the way we access information is not just an inevitable trend but a desirable one.

As our lives become more digital and connected, and information becomes ever denser, artificial intelligence becomes a bridge between us and the world. In the long run, education and self-improvement will be among the strongest applications of artificial intelligence, and they will work through dynamics that almost exactly mirror those of a malicious AI trying to manipulate you through a content feed. Algorithms have enormous potential to help us, to empower individuals, and to help society govern itself better.

The problem is not artificial intelligence, but control.

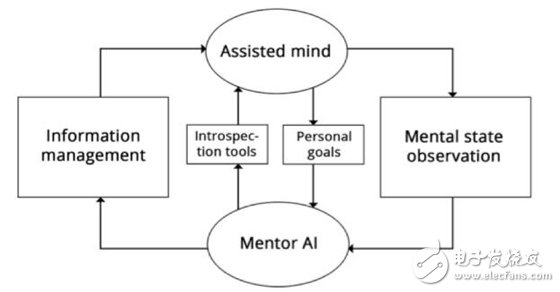

We should not let content feed algorithms manipulate users toward hidden goals, such as swaying their political views or wasting as much of their time as possible. Instead, users should be in charge of what the algorithms optimize for. After all, we are talking about your own content, your own worldview, your friends, your own life; the impact technology has on you should naturally be under your own control.

Algorithms should not be a mysterious force that works toward goals contrary to our own interests. Instead, they should be tools that serve our own purposes, such as education and personal growth rather than mere entertainment.

I think any product that applies an algorithm should:

Openly and transparently state what goals the algorithm is currently optimizing for, and how these goals affect the information the user receives.

Provide users with simple features to set these goals themselves. For example, users should be able to configure their feed to pursue goals they choose, in the specific directions they care about.

Show users, in an always-visible way, how much time they are spending on the product.

Provide tools that let users control how much time they spend on the product. For example, if you set a daily time target, the algorithm should help you manage yourself toward that target.
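The four requirements above could be sketched as a feed whose objective and time budget are set by the user. This is a hypothetical design, not an existing product API: `FeedSettings`, `Feed`, the objective names, and the per-item scores are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class FeedSettings:
    objective: str = "learning"    # chosen by the user, not by the platform
    daily_limit_minutes: int = 30  # user-defined daily time target


@dataclass
class Feed:
    settings: FeedSettings
    minutes_today: float = 0.0

    def describe_objective(self):
        """Transparency: say what the ranking currently optimizes for."""
        return f"This feed is currently optimizing for: {self.settings.objective}"

    def record_session(self, minutes):
        """Time awareness: keep a visible running total for the day."""
        self.minutes_today += minutes

    def remaining_minutes(self):
        return max(0.0, self.settings.daily_limit_minutes - self.minutes_today)

    def rank(self, items):
        """Rank (title, scores) pairs by the user's objective.

        Returns nothing once the daily time target has been reached.
        """
        if self.remaining_minutes() == 0:
            return []
        key = self.settings.objective
        return sorted(items, key=lambda it: it[1].get(key, 0.0), reverse=True)


feed = Feed(FeedSettings(objective="learning", daily_limit_minutes=30))
items = [
    ("Celebrity gossip", {"engagement": 0.9, "learning": 0.1}),
    ("Intro to statistics", {"engagement": 0.3, "learning": 0.8}),
]
print(feed.describe_objective())
ranked = feed.rank(items)
feed.record_session(30)
after_limit = feed.rank(items)
```

The design choice worth noting is that the ranking key comes from the user's settings rather than from a fixed engagement metric, so the same ranking machinery serves whatever goal the user has picked.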

Protect yourself with artificial intelligence while avoiding being controlled

We should build artificial intelligence to serve humans, not to manipulate them for profit or for some political purpose.

What if the algorithm did not work like a casino operator or a propagandist?

What if, instead, the algorithm were more like a mentor or a good librarian, one that used its deep understanding of your psychology, and that of millions of other people, to recommend the book that best matches your needs and helps you grow?

This kind of artificial intelligence is a guide for your life, one that can help you find the best path to your goals.

Can you imagine looking at your own life through the lens of an algorithm that has analyzed millions of people? Or co-authoring a book with an encyclopedic system? Or working with a system that understands all of current human knowledge?

In a product where you are fully in control of the artificial intelligence you interact with, a more advanced algorithm is not a threat but a benefit: it allows you to achieve your goals more effectively.

Sixth, the construction of anti-social media

All in all, artificial intelligence will become our interface to a world made of digital information. That can equally well enable individuals to manage their lives better, or lead to a complete loss of that control.

Unfortunately, social media is currently heading down the wrong road.

However, it is still early enough for us to reverse course.

We need to develop product categories and markets in which incentives are aligned with putting users in charge of the algorithms that affect them, rather than using artificial intelligence to manipulate users for profit or for some political purpose. We need to work hard to build products that are the opposite of social media such as Facebook.

In the distant future, these products may take the form of artificial intelligence assistants: digital mentors that help you along the way while leaving you in control of the goals pursued in your interactions.

At the moment, search engines can be seen as an early example of an AI-driven information interface that serves the user rather than trying to control the user.

A search engine is a tool that you deliberately use to achieve a specific purpose. You tell the search engine what it should do for you, rather than passively receiving content. The search engine tries to minimize the time it takes to get from question to answer, from problem to solution, rather than to waste your time.

You might think: after all, the search engine is still an artificial intelligence layer between us and the information. Couldn't it bias its results to manipulate us?

Yes, every type of information management algorithm has this risk.

But there is a stark contrast with social networks: in this case, market incentives are actually aligned with user needs, pushing search engines to be as relevant and objective as possible. If a search engine fails to be as useful as possible, users will not hesitate to turn to a competing product. Importantly, a search engine offers a much smaller psychological attack surface than a social feed.

The threat described in this article requires a product to combine the following characteristics:

Perception and action: The product must not only control what is presented to you (news and social content), but also be able to "perceive" your current state of mind through features like "likes", chat messages, and status updates. Without both perception and action, no reinforcement loop can be established. As the saying goes, "to do a good job, one must first sharpen one's tools."

The center of life: The product must be the main source of information for at least some of its users, with typical users spending hours on it every day. An auxiliary, specialized product or service (such as Amazon's item recommendations) does not pose a serious threat.

Social component: This activates a broader and more effective range of psychological control vectors (especially social reinforcement). Impersonal content has only a fraction of the same leverage over our minds.

Business incentives: The product's goal is to manipulate users and make them spend more time on the product.

Most artificial intelligence-driven products do not meet these requirements.

Social networks, on the other hand, combine all of these risk factors, which gives them frightening power.

As technologists, we should gravitate toward products that do not have these features and reject products that combine them all, if only because of their potential for dangerous misuse.

Build search engines and digital assistants instead of social news feeds, and make your recommendation engines transparent, configurable, and constructive, rather than slot-machine-like systems that maximize "engagement" and waste your time.

Invest in interface and interaction design, as well as related expertise such as artificial intelligence, to build excellent configuration screens for your algorithm, so that users can use the product according to their own goals.

Importantly, we should inform and educate users about these issues, so that they have enough information to decide whether to use a product that could manipulate them. That would generate enough market pressure to trigger technological change.

Seventh, conclusion: on the way forward

Social media not only knows enough about us to build powerful psychological models of individuals and groups; it also increasingly controls our access to information. It can manipulate what we believe, what we feel, and what we do through a series of effective psychological attacks.

A sufficiently advanced artificial intelligence algorithm, with both a perception of our mental state and the ability to act on it, can effectively control our beliefs and behavior.

Using artificial intelligence as our interface to information is not a problem in itself. Such a human-computer interface, if well designed, may bring great benefits and empowerment to all of us. The key is that users should have complete control over the algorithm, as a tool for pursuing their own goals (in the same way that they use search engines).

As technologists, we have a responsibility to reject products that take control away from users and to build information interfaces that are accountable to our users. Don't use AI as a tool to control users; instead, let your users use AI as a tool that serves them.

One road scares me; the other gives me hope. There is still enough time for us to do better.

If you are working on these technologies, please keep this in mind:

You may not be malicious. You may simply not care. You may value your restricted stock more than our shared future. But whether you care or not, because you have a hand in the infrastructure of the digital world, your choices affect others, and you may end up being held responsible for them.
