2016 was the year artificial intelligence took off. With the popularity of AI applications such as face recognition and driver assistance, Internet giants (Google, Facebook, Baidu, etc.) and semiconductor giants (Nvidia, Qualcomm, Intel, etc.) have all been staking out positions in the field. Naturally, the hottest topic at CES 2017 was artificial intelligence. As AI applications emerge in an endless stream, we should also note that it is the semiconductor manufacturers that stand behind them, supplying low-cost platforms on which these applications run and thereby accelerating the spread of AI. Today, let's take a look at how the major semiconductor manufacturers performed at CES.

1. Qualcomm

Qualcomm is undoubtedly the leader in mobile chips. Its main announcement at CES was the Snapdragon 835 SoC, which is manufactured on Samsung's 10nm process and features an 8-core Kryo 280 CPU (4 high-performance cores clocked at up to 2.45GHz and 4 low-power cores clocked at up to 1.9GHz).

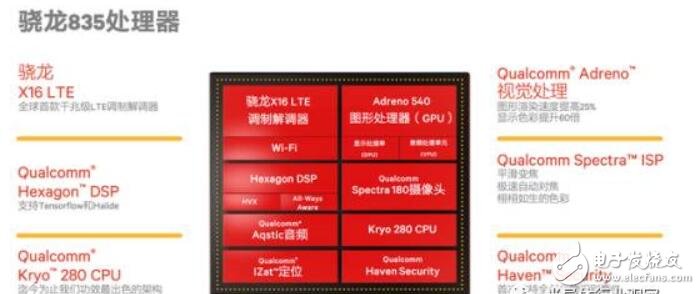

In addition to the CPU, the Snapdragon 835 includes core modules such as the X16 LTE modem, Adreno GPU, Hexagon DSP and Spectra ISP. Of these, the Hexagon DSP and the Adreno GPU are the most closely related to artificial intelligence.

Deep learning applications are becoming increasingly common on mobile devices. A recent example is the wave of style-transfer apps, which render one picture in the artistic style of another.

In mobile deep learning applications, the required numerical precision is often modest, and fixed-point arithmetic is sufficient for most applications. The Hexagon DSP in the Snapdragon series executes fixed-point operations efficiently. In earlier versions, however, the DSP was not friendly to deep learning: it was hard for developers to call on the DSP for the fixed-point operations deep learning requires. In response, Qualcomm has been steadily strengthening the DSP's support for deep learning. In the Snapdragon 835, the Hexagon DSP with Hexagon Vector Extension (HVX) is further enhanced, including better support for custom neural network layers.
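To make the fixed-point idea concrete, here is a minimal sketch, assuming a generic symmetric 8-bit quantization scheme (not necessarily what Qualcomm's tools use): floats are mapped to small signed integers that share one scale factor, the multiply-accumulate runs entirely in integers, and a single rescale at the end recovers an approximate real-valued result. The function names and sample values are hypothetical.

```python
# Symmetric 8-bit quantization: a generic scheme used here for illustration,
# not necessarily the scheme Qualcomm's Hexagon tooling applies.

def quantize(values, num_bits=8):
    """Map floats to signed integers that share a single scale factor."""
    qmax = 2 ** (num_bits - 1) - 1            # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale

def int_dot(qa, qb):
    """Integer-only multiply-accumulate, as fixed-point hardware performs it."""
    acc = 0
    for a, b in zip(qa, qb):
        acc += a * b
    return acc

def quantized_dot(a, b):
    qa, sa = quantize(a)
    qb, sb = quantize(b)
    # A single floating-point rescale at the end recovers the real-valued result.
    return int_dot(qa, qb) * sa * sb

weights = [0.12, -0.50, 0.33, 0.91]       # made-up example values
activations = [1.00, 0.25, -0.75, 0.40]
exact = sum(w * x for w, x in zip(weights, activations))
approx = quantized_dot(weights, activations)
print(f"float: {exact:.4f}  int8: {approx:.4f}")
```

With these inputs the integer path agrees with the floating-point result to within about 0.003, which is the kind of "controllable" precision loss that makes fixed-point DSPs attractive for inference.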

In addition, the Adreno GPU is the module in the Snapdragon SoC that performs efficient SIMD (single instruction, multiple data) parallel computing. The GPU's SIMD capability makes efficient deep learning computation possible, but developers also need a complete programming interface to exploit it. Nvidia's programming interface is CUDA, whose ease of use has made it one of the preferred development platforms for deep learning developers. On non-Nvidia GPUs, the CUDA-like counterpart is OpenCL. The Adreno GPU in the Snapdragon 835 fully supports OpenCL 2.0, which is good news for developers who want to use the GPU in Qualcomm's SoCs for deep learning.
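The reason deep learning maps well onto a GPU is that its core operations split into many independent, identical computations. The sketch below imitates, in plain Python, the data-parallel style that OpenCL exposes: a "kernel" computes exactly one output element of a matrix multiply, and a launcher runs it over a 2-D grid of indices. The names `matmul_kernel` and `launch_2d` are hypothetical; the launcher here walks the grid sequentially, whereas a GPU would run many work-items at once.

```python
# Hypothetical illustration of the data-parallel model that OpenCL exposes:
# a "kernel" computes one output element, and the runtime launches it over a
# grid of indices. Here the grid is walked sequentially; on a GPU, many
# work-items would execute the same instructions on different data at once.

def matmul_kernel(gid_row, gid_col, a, b, out):
    """Body of one work-item: compute out[row][col] only."""
    acc = 0.0
    for k in range(len(b)):
        acc += a[gid_row][k] * b[k][gid_col]
    out[gid_row][gid_col] = acc

def launch_2d(kernel, rows, cols, *args):
    """Stand-in for enqueueing a kernel over a rows x cols index space."""
    for r in range(rows):
        for c in range(cols):
            kernel(r, c, *args)   # no work-item depends on another's result

a = [[1.0, 2.0], [3.0, 4.0]]
b = [[5.0, 6.0], [7.0, 8.0]]
out = [[0.0, 0.0], [0.0, 0.0]]
launch_2d(matmul_kernel, 2, 2, a, b, out)
print(out)  # [[19.0, 22.0], [43.0, 50.0]]
```

Because no work-item reads another's output, the order of execution does not matter, which is exactly what lets the hardware run them in parallel.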

Finally, Qualcomm upgraded its software framework, including the Snapdragon Neural Processing Engine, which adds support for Google's TensorFlow and is optimized for power and performance across the Snapdragon's heterogeneous cores. This lets deep learning developers harness the computing power of Snapdragon SoCs more efficiently for a range of applications.

Review

As a semiconductor giant that grew out of the communications industry, Qualcomm still treats wireless communication as its main strategic focus. Its presence in autonomous driving centers on connected-car (Internet of Vehicles) technology rather than artificial intelligence. However, as artificial intelligence moves toward mobile (embedded) devices, Qualcomm has gradually increased deep learning support in the Snapdragon SoC. That support shows up mainly at the instruction level and in the application framework, letting developers use existing SoC resources more efficiently for deep learning computation. Dedicated deep learning hardware from Qualcomm (such as an accelerator module) has seen no follow-up since the experimental Zeroth project. Qualcomm's investment in deep learning is clearly conservative, which gives vendors focused on embedded deep learning hardware a chance to overtake it.

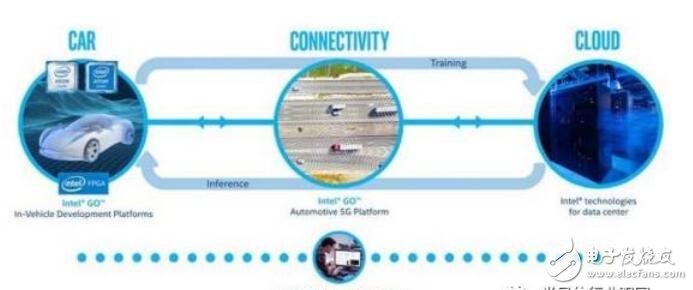

2. Intel

Having missed the opportunity in mobile devices, Intel is unwilling to miss artificial intelligence as well. At this year's CES, Intel released the GO platform, signaling its determination in autonomous driving. The GO platform covers connected-car technology, in-vehicle computing and cloud computing services. For in-vehicle computing, Intel will use Atom and Xeon CPUs that meet automotive electronics standards, along with Altera's latest FPGA technology. In the cloud, Intel GO will provide a wide range of technologies, including high-performance Intel Xeon processors, Intel Arria 10 FPGAs, SSDs and the Intel Nervana platform, to build powerful infrastructure for deep learning training and simulation that meets the needs of the autonomous driving industry. Intel also released an SDK for the GO platform so that developers can take full advantage of its computing power.

Autonomous driving is one of Intel's key focus areas. At the end of 2016, Intel split its automotive team out of the IoT business unit and set up a separate autonomous driving division. More importantly, Intel formed an alliance with BMW and Mobileye last year and plans to launch a driverless car by 2021. In this alliance, the BMW Group is responsible for driving control and powertrain components and for evaluating overall functional safety, including building high-performance simulation engines, integrating components, and producing prototypes. The Intel GO platform provides a scalable development and computing platform for key functions including sensor fusion, driving policy, environmental modeling, path planning and decision making. Mobileye contributes its proprietary EyeQ 5 high-performance computer vision processor, which offers automotive-grade functional safety and low power consumption. EyeQ 5 processes and interprets data from 360-degree surround-view vision sensors together with localization data. Mobileye will also work with the BMW Group on a sensor fusion solution that combines data from vision, radar and optical sensors into a complete model of the vehicle's surroundings and, together with its artificial intelligence algorithms, lets the car handle all kinds of complex driving situations safely.
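Sensor fusion can sound abstract, so here is one textbook building block as a minimal sketch: combining independent, noisy estimates of the same quantity (say, the distance to the car ahead from a camera and from a radar) by inverse-variance weighting. This is a generic technique chosen for illustration, not Mobileye's or BMW's actual algorithm, and the readings and variances are made-up numbers.

```python
# Minimal sketch of one textbook sensor-fusion step: combining independent,
# noisy estimates of the same quantity by inverse-variance weighting.
# This is a generic technique, not Mobileye's or BMW's actual algorithm;
# the sensor readings and variances below are made-up example numbers.

def fuse(estimates):
    """estimates: list of (value, variance) pairs from different sensors.

    Returns the fused value and its variance. Lower-variance (more
    trustworthy) sensors get proportionally more weight.
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total

# Distance (m) to the car ahead, as seen by a camera and a radar.
camera = (25.0, 4.0)   # vision: noisier range estimate
radar = (24.0, 1.0)    # radar: measures range more precisely
print(fuse([camera, radar]))  # (24.2, 0.8)
```

The fused variance (0.8) is smaller than either sensor's alone, which is the basic payoff of fusing complementary sensors; production systems typically embed this idea in a Kalman-style filter that also tracks motion over time.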

At a pre-show press conference at this year's CES, the BMW, Intel and Mobileye alliance announced that about 40 BMW autonomous test vehicles will begin road testing in the second half of 2017, an important step toward the three companies' shared goal of driverless cars. The companies said these BMW 7 Series vehicles will use Intel and Mobileye technology and will begin a global road-testing program in the US and Europe.

Review

Intel's investment in artificial intelligence is currently concentrated in the cloud, where its acquisitions of Altera (FPGAs) and Nervana already give its cloud AI business strong performance. In autonomous driving, Intel has formed an alliance with BMW and Mobileye: Intel supplies the computing platform, Mobileye handles algorithms, environment perception and big-data collection, and BMW builds the cars. Intel remains low-key in mobile artificial intelligence, but its acquisition of Movidius last year suggests it is actively positioning itself there, and more moves are likely soon.

3. Nvidia

Riding the artificial intelligence boom, Nvidia's share price more than tripled last year, an astonishing run. This year CES invited Nvidia's Jensen Huang to give the headline keynote on the opening night, and Nvidia was without doubt the focus of the show. Two of the products Nvidia presented were related to artificial intelligence: Shield + Spot for the home Internet of Things, and Xavier, an in-vehicle artificial intelligence platform.

First, Shield + Spot. Shield is the game console Nvidia launched a few years ago, and a new version was released at CES. Beyond the usual gaming and online video playback, the new Shield's biggest highlight is the built-in Google Assistant, which carries out user instructions through speech recognition. In the demo video at the CES presentation, for example, a user talks to Google Assistant to have the Shield play videos, display photos and so on.

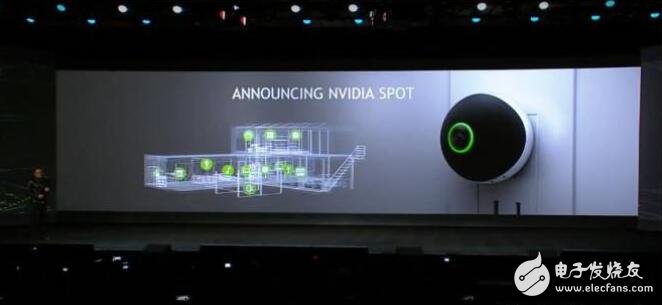

Nvidia's ambitions for Shield, however, go far beyond a "smart set-top box with voice interaction": it is meant to be a smart home hub. So that Shield can receive voice commands from anywhere in the home, Jensen Huang also unveiled Nvidia Spot, a companion device for the new Shield. Spot is a purpose-built microphone that can be placed anywhere in the home and connects to the Shield over the home network to relay the user's voice commands.

In Nvidia's plan, Shield controls far more than the TV: it can also control a variety of smart appliances (such as Nest products). In Nvidia's smart home solution, Spot units throughout the home receive the user's instructions and relay voice commands from any room back to the Shield, the central IoT node, which then controls smart appliances accordingly, for example turning on the air conditioner or starting a robot vacuum.
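The hub-and-microphone arrangement described above can be sketched as a simple command router: every remote microphone forwards transcribed text to one central node, which looks up a handler and acts on the right device. This is a hypothetical illustration; the class, device names and phrases are assumptions, not Nvidia's Shield or Spot software, and real assistants use intent recognition rather than exact phrase matching.

```python
# Hypothetical sketch of a voice-controlled home hub: microphones anywhere in
# the home forward recognized text to one central node, which routes it to a
# device handler. All device names and phrases are illustrative assumptions,
# not Nvidia's actual Shield/Spot software.

from typing import Callable, Dict

class HomeHub:
    def __init__(self) -> None:
        self.routes: Dict[str, Callable[[], str]] = {}

    def register(self, phrase: str, action: Callable[[], str]) -> None:
        self.routes[phrase.lower()] = action

    def on_voice_command(self, mic_id: str, text: str) -> str:
        """Called whenever a remote microphone delivers a transcribed command."""
        action = self.routes.get(text.strip().lower())
        if action is None:
            return f"[{mic_id}] unrecognized: {text!r}"
        return f"[{mic_id}] {action()}"

hub = HomeHub()
hub.register("turn on the air conditioner", lambda: "AC on")
hub.register("start the vacuum", lambda: "robot vacuum started")

print(hub.on_voice_command("bedroom-mic", "Turn on the air conditioner"))
# [bedroom-mic] AC on
```

Keeping all device logic in the hub means the microphones can stay cheap and simple, which matches the division of labor between Spot and Shield described in the text.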

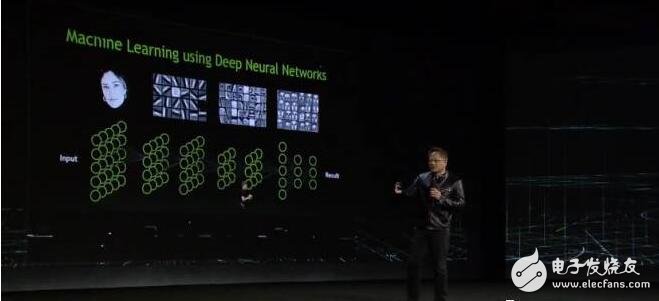

The second highlight of Nvidia's keynote was Xavier, an in-vehicle supercomputer module that Nvidia expects to release officially in 2017. Xavier combines a Volta GPU with 512 CUDA cores and a custom 8-core Nvidia ARM64 CPU. What stunned the industry most is its efficiency: it delivers a peak of 30 TOPS while consuming only 30 W, i.e., 1 TOPS/W. For comparison, ST's top-tier deep learning ASIC, presented as the first paper of the Deep Learning Processor session at ISSCC 2017 (the premier conference in the semiconductor field), reaches 2.9 TOPS/W, less than three times Xavier's figure. ST's accelerator is designed specifically for deep learning and generally can be used only for that, whereas Xavier is a general-purpose computing platform: its 1 TOPS/W is available for workloads beyond deep learning, so its generality is far better than an ASIC's. A dedicated ASIC is usually expected to be an order of magnitude more efficient than a general-purpose platform; here the gap has shrunk to less than 3x, which shows how strong Xavier is. When the performance gap is this small, most people will choose a general-purpose platform over an ASIC, so Xavier's numbers put real pressure on engineers betting on deep learning accelerator ASICs.
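The efficiency comparison in the paragraph above is simple arithmetic; this short sketch just makes it explicit, using only the figures quoted there (Xavier at 30 TOPS and 30 W, the ST accelerator at 2.9 TOPS/W). The helper name `tops_per_watt` is hypothetical.

```python
# Back-of-the-envelope check of the energy-efficiency figures quoted above.
# Inputs are the numbers cited in the text: Xavier at 30 TOPS / 30 W and
# ST's ISSCC 2017 accelerator at 2.9 TOPS/W.

def tops_per_watt(tops: float, watts: float) -> float:
    return tops / watts

xavier = tops_per_watt(30.0, 30.0)   # 1.0 TOPS/W
st_asic = 2.9                        # TOPS/W, as reported for the ST chip

gap = st_asic / xavier
print(f"Xavier: {xavier:.1f} TOPS/W, ASIC advantage: {gap:.1f}x")
# Xavier: 1.0 TOPS/W, ASIC advantage: 2.9x
```

An "order of magnitude" advantage would be roughly 10x; the quoted numbers give under 3x, which is the basis for the argument that Xavier narrows the gap to dedicated ASICs.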

Another interesting detail is that Xavier's performance is quoted not in FLOPS (floating-point operations per second), as is conventional for GPUs, but in OPS (fixed-point operations per second). On conventional GPUs, deep learning computations are usually done in floating point, which preserves numerical accuracy at the cost of speed. A very active direction in deep learning hardware is therefore to replace floating-point arithmetic with fixed-point arithmetic, greatly increasing speed while keeping the loss of accuracy under control. Nvidia already added some fixed-point support to its Pascal GPUs, and clues from the CES keynote suggest that the next-generation Volta GPUs will support fixed-point operations as well. As the dominant player in deep learning hardware, Nvidia's strong backing of fixed-point arithmetic will push algorithm developers to build more deep learning frameworks around fixed-point numbers. In the foreseeable future, deep learning networks using fixed-point numbers are likely to become increasingly popular.
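What "fixed-point arithmetic with controllable accuracy loss" means can be shown with a minimal Q-format sketch: each real value is stored as an integer scaled by a power of two, multiplication is an integer multiply followed by a rounding shift, and the error is bounded by the number of fractional bits. The choice of 8 fractional bits and the helper names are illustrative assumptions, not a description of Xavier's or Pascal's internal number formats.

```python
# Minimal sketch of fixed-point (Q-format) arithmetic, the kind of integer
# math that "OPS" ratings count. A value x is stored as the integer
# round(x * 2**FRAC_BITS); FRAC_BITS = 8 is an illustrative choice.

FRAC_BITS = 8
ONE = 1 << FRAC_BITS          # fixed-point representation of 1.0

def to_fixed(x: float) -> int:
    return round(x * ONE)

def to_float(q: int) -> float:
    return q / ONE

def fixed_mul(a: int, b: int) -> int:
    # The raw product carries 2 * FRAC_BITS fractional bits; add half an LSB
    # and shift right to round back to FRAC_BITS.
    return (a * b + (ONE >> 1)) >> FRAC_BITS

x, y = 1.37, -0.82
q = fixed_mul(to_fixed(x), to_fixed(y))
print(to_float(q), x * y)
```

Here the fixed-point result differs from the floating-point product by less than 0.002; adding fractional bits shrinks that error, while fewer bits make the hardware cheaper and faster, and that trade-off is the knob fixed-point deep learning hardware turns.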

Xavier's performance and power consumption match the needs of the driverless market well, and autonomous driving is the top priority of Nvidia's artificial intelligence business. Jensen Huang noted that transportation is a trillion-dollar market, with about one billion cars on the world's roads, yet it suffers heavy losses, mainly because human drivers make mistakes and a single mistake can cause a very costly accident. Using artificial intelligence to assist driving could greatly reduce these losses.

Nvidia also presented self-driving and co-pilot applications built on Xavier. For autonomous driving, Nvidia showed its BB8 self-driving car, which can already change lanes automatically according to road conditions, slow down for turns and avoid pedestrians. Nvidia is also working with Audi and expects to reach Level 4 autonomy by 2020 (i.e., an autonomous driving system that needs human intervention only in rare cases).

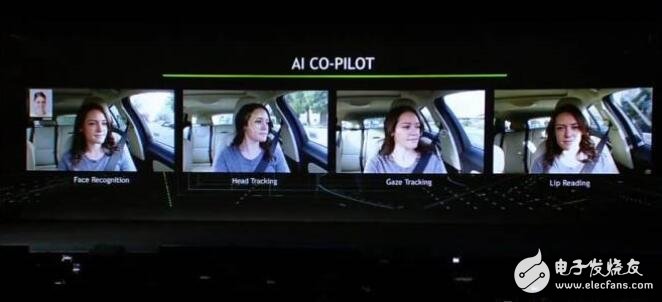

For driver assistance, Nvidia presented an AI co-pilot built on four technologies: face recognition, head tracking, gaze tracking and lip reading. Face recognition uses deep learning to read the driver's expression and infer his or her emotional state; if it detects emotional instability, it reminds the driver to calm down and avoid impulsive driving. Head tracking and gaze tracking help the co-pilot system judge whether the driver is paying attention and issue a prompt alert when the driver is distracted. Lip reading lets the system recognize and execute commands spoken by the driver even in a relatively noisy cabin. According to Jensen Huang, Nvidia is working with the LipNet team at the University of Oxford on a deep learning model for lip reading; the model is now 93.4% accurate and is expected to appear in production cars soon. Finally, the co-pilot system can score driving behavior, which both encourages safe driving and can give insurance companies a basis for setting premiums.
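The head- and gaze-tracking alert described above needs a decision rule on top of the per-frame neural network output. A minimal sketch, assuming the model reports one on-road/off-road flag per video frame: raise an alert once the driver's gaze has been off the road continuously for longer than a threshold. The 2-second threshold, 30 fps frame rate and function name are illustrative assumptions, not Nvidia's parameters.

```python
# Hypothetical sketch of a driver-distraction rule layered on top of gaze
# tracking: a per-frame model (not shown) reports whether the driver's gaze
# is on the road, and an alert fires once the gaze has been off the road
# continuously for longer than a threshold. The 2-second threshold and the
# 30 fps frame rate are illustrative assumptions, not Nvidia's parameters.

def distraction_alerts(gaze_on_road, fps=30, max_off_seconds=2.0):
    """Return the frame indices at which an alert should be raised."""
    limit = int(max_off_seconds * fps)   # frames of continuous inattention
    alerts = []
    off_run = 0
    for i, on_road in enumerate(gaze_on_road):
        off_run = 0 if on_road else off_run + 1
        if off_run == limit + 1:          # alert once per distraction episode
            alerts.append(i)
    return alerts

# 1 s looking ahead, 3 s looking away, then back to the road.
frames = [True] * 30 + [False] * 90 + [True] * 30
print(distraction_alerts(frames))  # [90]
```

Requiring a sustained run of off-road frames, rather than reacting to a single frame, keeps brief mirror checks from triggering false alarms.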

Review

Interestingly, unlike AMD, Nvidia did not launch new GPUs at CES but went straight to application platforms. Nvidia is evidently shifting from a semiconductor manufacturer to an artificial intelligence platform provider. Moreover, if Qualcomm's chips target mainly mobile devices and Intel's mainly serve cloud servers, Nvidia's products sit between the two, serving cars and homes.

In data centers with huge data volumes, Nvidia's GPUs are an integral part of the servers, but Nvidia's own servers are still in the testing phase, so in the big-data artificial intelligence market Nvidia supplies hardware rather than a platform. At the other extreme, in embedded deep learning, where data volumes are small, computing demands are modest and power budgets are extremely tight, Nvidia's GPU-based platform consumes too much power, and its surplus computing power drives up cost, so it cannot compete with ASICs or with SoCs such as Qualcomm's. In the ADAS and home appliance markets, however, Nvidia's platform fits both the required computing power (10-100 TOPS) and power consumption (10-100 W) well, so it is no surprise that Nvidia is concentrating on autonomous driving and the home IoT hub.

4. Chinese manufacturers

At this CES, the artificial intelligence products exhibited by Chinese manufacturers were mainly built on chips from foreign semiconductor companies, such as vision-based advanced driver assistance (ADAS) systems built on Intel chips and DJI's drones.

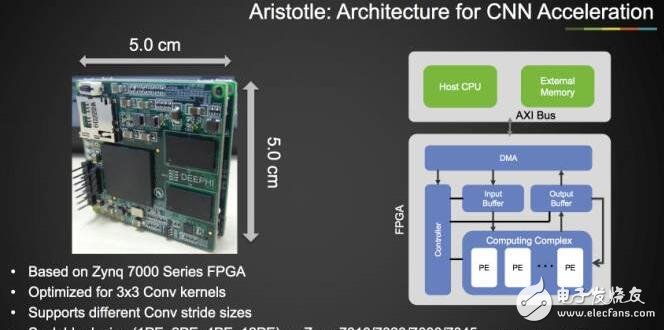

China is not currently behind the international state of the art in artificial intelligence chips. Cambricon, which grew out of the Chinese Academy of Sciences, has won high recognition from international academia and industry, and its paper ranked first at ISCA 2016 (the International Symposium on Computer Architecture). A series of chips based on its technology has been taped out and verified and is now actively moving toward commercialization. DeePhi Tech (Shenjian Technology) focuses on neural network compression, and its deep learning processor architecture (DPU) is also internationally recognized. In the second half of last year, it presented two accelerators at Hot Chips: Aristotle (for convolutional neural networks) and Descartes (for speech recognition).

Cambricon chip

DPU architecture released by DeePhi

In addition, Huawei, DJI and other companies are actively developing hardware for artificial intelligence. In AI-specific hardware, domestic and foreign companies stand at roughly the same starting line, and domestic manufacturers carry less legacy baggage than the foreign semiconductor giants, so they have a chance to overtake them. However, domestic AI chip makers cannot develop deep learning accelerators alone; to be competitive they must provide complete solutions, and Chinese semiconductor manufacturers still have a long way to go in this respect.
