Over the past few years, virtualization, the separation of software from the hardware that hosts it, has become a widely known concept. In this article, we describe what virtualization is, look at how it works in a PowerVR GPU, and explain the benefits it brings to various markets, especially the automotive industry.

On desktop computers, current virtualization technology allows one machine to run multiple operating systems at the same time. For example, a developer can run a Linux "guest" operating system on a Microsoft Windows host. In enterprise environments, it is usually used to consolidate workloads in order to reduce CapEx and OpEx. On embedded platforms, the main purpose of virtualization is to ensure security through separation while reducing costs.

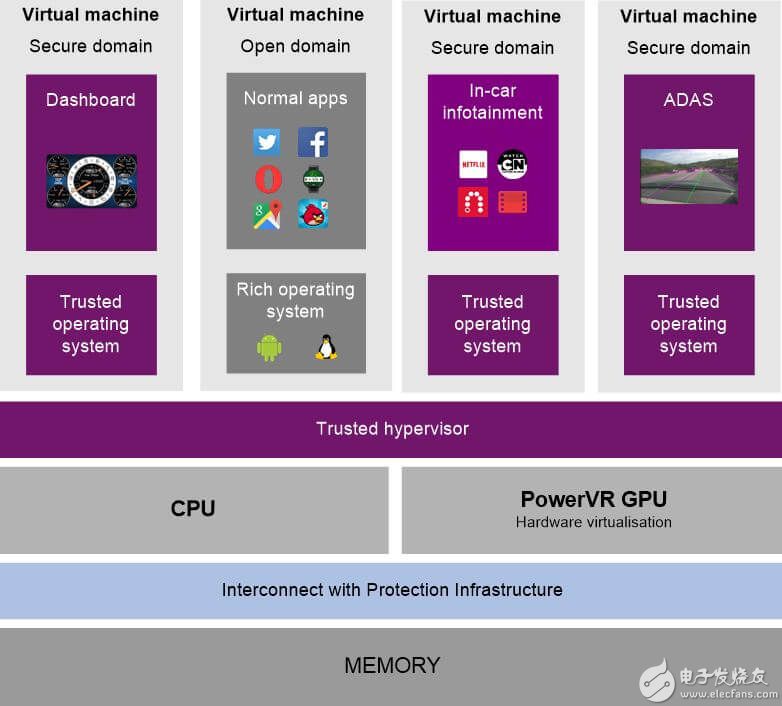

When it comes to GPUs, virtualization provides the ability to support multiple operating systems running at the same time, each of which can submit graphics workloads to the single underlying graphics hardware entity. This is becoming increasingly important in the automotive field, where some of the most demanding systems, such as the ADAS (Advanced Driver Assistance System) and the digital dashboard displays, can be placed in completely separate domains to ensure that they operate safely and independently.

To break it down, a virtualized GPU setup involves the following entities:

• Hypervisor: Essentially the software entity that presents the guest operating systems with a shared virtual hardware platform (in this case, the GPU hardware) and manages the hosting of the guest operating systems.

• Host OS: Compared with the guest operating systems, the host operating system has a complete driver and a higher level of control over the underlying hardware.

• Guest OS: A virtual machine with its own operating system, hosted by the hypervisor. There can be one or more guests, and they share the available underlying hardware resources.

Hardware Virtualization vs Paravirtualization

Since the Series6XT GPU cores, PowerVR has offered advanced, full hardware virtualization, and this has been further enhanced in Series8XT, which we discuss in detail later in this article. Full virtualization means that each guest operating system running under the hypervisor is unaware that it is sharing GPU resources with other guest operating systems and the host operating system. Each guest has a complete driver and can submit tasks directly to the underlying hardware, independently and concurrently. The advantage of this approach is that the hypervisor adds no overhead when the different "guests" submit tasks, which reduces task submission latency to the GPU and therefore yields higher utilization.

This differs from paravirtualization, in which the guest operating systems are aware that they are virtualized and that they share the underlying hardware resources with other guests. In this case, each guest has to submit its tasks through the hypervisor, and the entire system must work together as a cohesive unit. The disadvantage of this solution is that the hypervisor (running on the CPU) adds significant overhead and task submission latency, which potentially reduces the effective utilization of the underlying GPU hardware. In addition, the guest operating systems need to be modified (with additional functionality) so that they can communicate through the hypervisor.
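The difference between the two submission paths can be illustrated with a toy model. The sketch below is not PowerVR driver code: the `Gpu` and `Hypervisor` classes, their methods, and the task names are hypothetical and exist only for this illustration. It simply counts how often the hypervisor sits in the submission path in each scheme.

```python
from dataclasses import dataclass, field


@dataclass
class Gpu:
    """Toy stand-in for the GPU: it just records what was submitted."""
    submitted: list = field(default_factory=list)

    def submit(self, os_name: str, task: str) -> None:
        self.submitted.append((os_name, task))


@dataclass
class Hypervisor:
    """Toy hypervisor that counts how often it handles a submission."""
    gpu: Gpu
    interventions: int = 0

    def forward(self, os_name: str, task: str) -> None:
        # Paravirtualization: every guest task passes through here (on the CPU).
        self.interventions += 1
        self.gpu.submit(os_name, task)


def submit_full_virtualization(gpu: Gpu, os_name: str, tasks: list) -> None:
    # Full hardware virtualization: the guest driver talks to the GPU directly.
    for task in tasks:
        gpu.submit(os_name, task)


def submit_paravirtualized(hv: Hypervisor, os_name: str, tasks: list) -> None:
    # Paravirtualization: the modified guest hands each task to the hypervisor.
    for task in tasks:
        hv.forward(os_name, task)


tasks = ["frame-0", "frame-1", "frame-2"]

full_hv = Hypervisor(Gpu())
submit_full_virtualization(full_hv.gpu, "cluster-os", tasks)

para_hv = Hypervisor(Gpu())
submit_paravirtualized(para_hv, "cluster-os", tasks)

print(full_hv.interventions, para_hv.interventions)  # prints "0 3"
```

The GPU receives the same tasks in both cases. Only the paravirtualized path pays a per-task trip through the hypervisor, which is the overhead the text describes.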

GPU virtualization use cases

There are many use cases for GPU virtualization. The following are mainly from the embedded market:

• Automotive

• Digital TV (DTV)/Set Top Box (STB)

• Internet of Things (IoT)/wearable devices

• Smartphones/tablets

This article focuses mainly on the automotive market, because virtualization brings many benefits there and because its specific requirements make it one of the more complex markets. For more detail, you can refer to our white paper.

Why does the automotive market need virtualization?

GPU virtualization is becoming an essential requirement for the automotive industry. Most Tier 1 suppliers and OEMs are adding more ADAS features, and multiple high-resolution displays are becoming more common in newer cars.

As cars become more automated, the functionality of ADAS increases. These functions are computationally complex, and the powerful parallel computing capabilities of modern GPUs are well suited to handling them. At the same time, there is a trend toward higher-resolution displays in instrument clusters, in infotainment equipment (in the dashboard and rear seats), and on the windshield.

PowerVR for the automotive industry

So why is the PowerVR virtualization feature so well suited to cars? Essentially, because it provides a set of features that address a variety of issues: hardware robustness for maximum security, and quality of service for sustained performance, all while maximizing utilization of the hardware.

Isolation

Let us first look at basic isolation. This is the isolation between the different operating systems (OS) and their corresponding applications, which provides security through separation. This is, of course, one of the basic benefits of virtualization.

The following video demonstrates this feature. It shows one operating system driving a cluster display with key information such as speed and warning lights, and next to it a navigation application running on a second, less critical operating system. First the satellite navigation application crashes (induced artificially), followed by a kernel crash and then a full reboot of that operating system. The key point to note is that none of this affects the dashboard display application running on the other operating system, which continues to work without interruption. Note also that once the crashed operating system has rebooted, it can seamlessly submit tasks to the GPU again.

Quality of Service: Guaranteed Performance Level

One of the key requirements of the automotive industry is that one or more critical applications or operating systems have sufficient resources to deliver the required performance. On PowerVR, this is achieved through a priority mechanism. A dedicated microcontroller (MCU) in the GPU handles scheduling and sets the priority for each operating system (if required, priorities can also be set per application or workload within each operating system). When a higher-priority operating system submits a workload to the GPU, the workload of the lower-priority operating system is context switched out.

In simple terms, a "context switch" is where the current operation is paused at the earliest possible point and the required data is saved so that the operation can be resumed later.

On the Series6XT platform (the first PowerVR GPU generation to support full hardware virtualization) used in this demonstration, the earliest possible points are:

• Geometry processing: draw call granularity

• Pixel processing: tile granularity

• Compute processing: workgroup granularity

Once the higher-priority operating system's work is complete, the lower-priority workloads are resumed and completed. This feature helps ensure that critical, higher-priority workloads receive the GPU resources they need to meet their performance requirements.

The following video demonstrates this.
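The priority mechanism described in this section can be sketched as a small simulation. This is a simplified model, not the firmware running on the GPU's microcontroller: the `Workload` fields, the "larger number means higher priority" convention, and the idea of one time step per preemptible unit (for example, one tile) are all assumptions made for the illustration. A running workload can only be switched out at a unit boundary, which models the context switch granularity.

```python
import heapq
from dataclasses import dataclass


@dataclass
class Workload:
    os_name: str
    priority: int   # larger value = more important (assumed convention)
    arrival: int    # time step at which the workload is submitted
    units: int      # work to do, in preemptible units (e.g. tiles), >= 1


def run(workloads):
    """Return the name of the OS that owns the GPU at each time step."""
    pending = sorted(workloads, key=lambda w: w.arrival)
    remaining = {id(w): w.units for w in pending}
    ready = []      # min-heap of (-priority, submission order, workload)
    timeline = []
    now = 0
    nxt = 0
    while nxt < len(pending) or ready:
        # Admit everything submitted up to the current time step.
        while nxt < len(pending) and pending[nxt].arrival <= now:
            heapq.heappush(ready, (-pending[nxt].priority, nxt, pending[nxt]))
            nxt += 1
        if not ready:
            now = pending[nxt].arrival  # GPU is idle until the next submission
            continue
        # Run ONE unit of the highest-priority workload, then re-evaluate.
        # A lower-priority workload left in the heap keeps its progress,
        # which stands in for the saved context.
        _, _, current = ready[0]
        timeline.append(current.os_name)
        remaining[id(current)] -= 1
        now += 1
        if remaining[id(current)] <= 0:
            heapq.heappop(ready)
    return timeline


timeline = run([
    Workload("navigation", priority=0, arrival=0, units=4),
    Workload("cluster", priority=1, arrival=2, units=2),
])
print(timeline)
# ['navigation', 'navigation', 'cluster', 'cluster', 'navigation', 'navigation']
```

The navigation workload is switched out as soon as the cluster workload arrives and resumes where it left off once the cluster work is done. A finer unit size would shorten the wait before the higher-priority workload gets the GPU.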

Going Further: Series8XT Enhanced Virtualization

The first PowerVR series to fully support hardware virtualization was Series6XT, and all of the videos and demonstrations above run on a Series6XT platform. In this section, we discuss how Series8XT enhances this further with several new features and improvements.

Context switching granularity

On Series8XT, context switches can be performed at a finer granularity, which makes switching from lower-priority workloads to higher-priority workloads faster. The context switch granularity is now:

• Vertex processing: primitive granularity

• Pixel processing: sub-tile granularity, or in the worst case falling back to tile granularity

Per-data-master kill

If a lower-priority application does not context switch out within a defined time frame, a DoS mechanism is used to either kill the individual data master or soft reset the application, depending on the data master involved (compute, vertex, or pixel processing). Previous generations only supported killing compute; vertex and pixel processing required a soft reset, which affected a high-priority workload if it was running overlapped with the unsafe, lower-priority application. In Series8XT, all data masters can be killed individually, ensuring that high-priority or critical workloads are not affected even when they overlap with an application that has to be terminated.
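The decision described above can be written down as a small lookup. This is a sketch of the logic in the text only. The function name `evict`, the generation labels, and the microsecond timings are hypothetical, and the actual mechanism lives in GPU hardware and firmware whose interfaces are not described here.

```python
from enum import Enum
from typing import Optional


class DataMaster(Enum):
    COMPUTE = "compute"
    VERTEX = "vertex"
    PIXEL = "pixel"


class Action(Enum):
    CONTEXT_SWITCH = "context switch"
    KILL_DATA_MASTER = "per-data-master kill"
    SOFT_RESET = "soft reset"


# Which data masters can be killed individually, as stated in the text.
KILLABLE = {
    "previous generation": {DataMaster.COMPUTE},
    "Series8XT": {DataMaster.COMPUTE, DataMaster.VERTEX, DataMaster.PIXEL},
}


def evict(generation: str, master: DataMaster,
          switch_time_us: Optional[int], deadline_us: int) -> Action:
    """Decide how a lower-priority workload leaves the GPU.

    switch_time_us is how long the workload took to reach a context switch
    point, or None if it never got there. All timings are made-up values.
    """
    if switch_time_us is not None and switch_time_us <= deadline_us:
        return Action.CONTEXT_SWITCH       # the normal, well-behaved case
    if master in KILLABLE[generation]:
        return Action.KILL_DATA_MASTER     # only the offending work is lost
    return Action.SOFT_RESET               # wider impact on overlapping work


# A pixel workload that never yields, on each generation:
print(evict("previous generation", DataMaster.PIXEL, None, 500).value)
print(evict("Series8XT", DataMaster.PIXEL, None, 500).value)
```

With these inputs, the first call falls back to a soft reset while the second kills only the pixel data master, which is the improvement the section attributes to Series8XT.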

Per-SPU workload submission control

Thanks to this feature, a specific application can be given its own dedicated Scalable Processing Unit (SPU) within the GPU on which to execute its workloads. For example, a long-running, compute-based ADAS application in a car can run on its own dedicated SPU, while other applications, possibly from other operating systems, share the remaining GPU resources using other mechanisms (such as context switching in favor of higher-priority tasks).
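One way to picture this is as a set of bitmasks, one bit per SPU. The sketch below is purely illustrative: the function `partition_spus`, the four-SPU configuration, and the rule that at least one SPU stays shared are assumptions of this example, not a description of how the hardware or driver exposes the feature.

```python
def partition_spus(num_spus: int, dedicated: dict) -> tuple:
    """Reserve SPUs for named applications and return (masks, shared_mask).

    dedicated maps an application name to the number of SPUs reserved for it.
    Bit i of a mask is set if SPU i may run that application's work.
    """
    # Assumption of this sketch: something must be left for everyone else.
    if sum(dedicated.values()) >= num_spus:
        raise ValueError("at least one SPU must remain for shared workloads")
    masks = {}
    next_spu = 0
    for app, count in dedicated.items():
        masks[app] = ((1 << count) - 1) << next_spu
        next_spu += count
    all_spus = (1 << num_spus) - 1
    reserved = (1 << next_spu) - 1
    return masks, all_spus & ~reserved


# Hypothetical 4-SPU GPU with one SPU set aside for the ADAS application.
masks, shared = partition_spus(4, {"adas": 1})
print(f"adas={masks['adas']:04b} shared={shared:04b}")  # adas=0001 shared=1110
```

Because the dedicated and shared masks never overlap, the long-running ADAS work in this model cannot be delayed by context switches among the other applications.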

Tightly integrated second-level MMU

Previous-generation GPUs had a first-level MMU only, so SoC vendors had to design and implement a second-level (system-level) MMU or a similar mechanism to support virtualization. Series8XT integrates a second-level MMU in the GPU, which brings the following benefits:

• An optimized design that is tightly coupled to the first-level MMU, for low latency and increased efficiency

• Less development effort for SoC vendors and a faster time-to-market

• Separate software can be set up for the entities available in the hypervisor

• Full/two-way coherency support, to improve performance and reduce system bandwidth

• In essence, a higher level of protection and finer-grained (page-boundary) security support in a virtualized environment
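The role of the two MMU levels can be shown with a minimal address translation model. The sketch assumes the usual two-stage scheme, in which the first level is managed by the guest's driver and the second level by the hypervisor. The 4 KiB page size, the dictionary-based page tables, and all page numbers are hypothetical; PowerVR's actual table formats are not covered here.

```python
PAGE_SIZE = 4096  # assumed page size for this sketch


class PageFault(Exception):
    """Raised when an address falls in a page that is not mapped."""


def translate(page_table: dict, address: int) -> int:
    """Translate one address through a single-level {page: frame} table."""
    page, offset = divmod(address, PAGE_SIZE)
    if page not in page_table:
        raise PageFault(f"page {page} is not mapped")
    return page_table[page] * PAGE_SIZE + offset


def gpu_access(first_level: dict, second_level: dict, virtual_address: int) -> int:
    # First level (set up by the guest driver): GPU virtual -> guest physical.
    guest_physical = translate(first_level, virtual_address)
    # Second level (set up by the hypervisor): guest physical -> system physical.
    return translate(second_level, guest_physical)


# Hypothetical mappings for one guest OS.
guest_first_level = {0: 7, 1: 3}   # GPU virtual page -> guest physical page
guest_second_level = {7: 42}       # guest physical page -> system page

print(hex(gpu_access(guest_first_level, guest_second_level, 0x010)))  # 0x2a010
```

In this model the guest has mapped virtual page 1 to guest physical page 3, but the hypervisor never granted page 3, so an access to it raises `PageFault`. That page-granular check at the second level is what keeps one guest out of memory belonging to another.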

Summary

The hardware virtualization integrated into PowerVR GPUs is very effective and meets many of the needs of the automotive industry. Our newest Series8XT GPUs enhance these capabilities further to help enable next-generation in-vehicle infotainment and autonomous driving that is safe and cost-effective.
