Abstract: To address the problem of driver safety in the traffic field, this article proposes a design method for a driver emotion perception system based on the S5PV210 chip. The system uses an S5PV210 processor running the Linux 2.6.30 kernel. A USB industrial camera captures the driver's face image, facial expression feature values are obtained with a sparse representation-based expression feature classification method, and the driver's emotional state is finally obtained by mapping the recognized expression. A warning is issued when a preset situation occurs, and real-time data is sent back to the management center server over a WIFI network. Simulation experiments show that the correct recognition rate of the driver's emotion can currently exceed 80%.

To improve the efficiency of the logistics industry, the driver's mood needs to be assessed and adjusted so that the driver always remains in an efficient emotional state. In road transportation, a driver's negative emotions often lead to catastrophic accidents. When a driver is in a bad emotional state, reaction speed slows, information is processed more slowly, and decision quality suffers, so emotion is closely related to safe driving. This paper proposes a driver emotion sensing system based on the S5PV210 chip. A USB industrial camera captures the driver's face image, facial expression feature values are obtained with a sparse representation-based expression feature classification method, and the driver's emotional state is then obtained by mapping the recognized expression.

1 Overall System Principle and Hardware Design

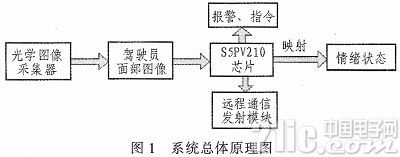

The overall principle of the system is shown in Figure 1. Samsung's high-end ARM Cortex-A8 S5PV210 processor is used as the main control chip. An optical image collector mounted in front of the driver captures the driver's face image, and the S5PV210 processor at the front end processes the image, using a sparse representation-based expression feature classification method to identify the driver's real-time expression; the driver's current emotional state is then mapped from that expression. In this way, computer vision is used to monitor the driver's facial features in real time, and a warning is given when a preset situation occurs, for example when the system determines that the driver is tired or angry. When the driver's state is judged unfavorable to driving, an alarm is raised and real-time data is sent back to the management center server over the WIFI network. The management center analyzes and processes all driver data and either provides decision data to the manager or gives advice according to preset rules. After a decision is made, a management command is issued to the driver so that the driver always remains in an efficient working state.
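The article does not list the expression-to-emotion mapping, the exact "preset situations", or the format of the data sent to the management center. The following is a minimal Python sketch of how that decision stage could look, assuming a hypothetical mapping table, a hypothetical set of unfavorable states, and a hypothetical rule that an alarm fires only when every result in a short sliding window is unfavorable (to suppress single-frame misclassifications). The class `EmotionMonitor`, the `window` parameter, and the JSON field names are all illustrative, not the author's design.

```python
import json
import time
from collections import deque
from typing import Deque, Optional

# Hypothetical mapping from recognized expression to emotional state
# (expression labels follow the JAFFE categories).
EXPRESSION_TO_STATE = {
    "neutral": "calm",
    "happy": "positive",
    "surprised": "alert",
    "angry": "angry",
    "sad": "low",
    "fearful": "anxious",
    "disgusted": "negative",
}

# Hypothetical set of states treated as unfavorable to driving.
UNFAVORABLE_STATES = {"angry", "anxious", "low"}


class EmotionMonitor:
    """Raises an alarm when every state in a sliding window is unfavorable."""

    def __init__(self, driver_id: str, window: int = 5) -> None:
        self.driver_id = driver_id
        self.recent: Deque[str] = deque(maxlen=window)

    def update(self, expression: str) -> Optional[str]:
        """Record one recognized expression; return a JSON report if an alarm fires."""
        state = EXPRESSION_TO_STATE.get(expression, "unknown")
        self.recent.append(state)
        window_full = len(self.recent) == self.recent.maxlen
        if window_full and all(s in UNFAVORABLE_STATES for s in self.recent):
            self.recent.clear()  # avoid re-alarming on every subsequent frame
            report = {
                "driver_id": self.driver_id,
                "state": state,
                "timestamp": time.time(),
            }
            # Sending this string to the management center server over WIFI
            # (e.g. via a socket or HTTP) is outside the scope of this sketch.
            return json.dumps(report)
        return None
```

With `window=3`, three consecutive "angry" results produce one JSON report, while a single "angry" frame surrounded by "happy" frames produces none. A longer window trades alarm latency for fewer false alarms.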

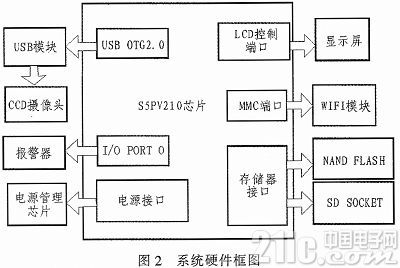

The hardware of the S5PV210-based driver emotion perception system mainly includes the LCD, WIFI, USB, camera, SD card, and power supply, as shown in Figure 2. The S5PV210 is connected to the display and power modules through the I2C bus, and its I/O ports are connected to the alarms. The memory ports are connected to the DDR and NAND memories respectively. The XMMC port is connected to the SD card, the MMC port to the WIFI module, the LCD port to the LCD, and the USB port to the camera.

2 System Recognition Algorithm

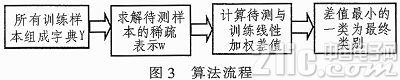

A sparse representation-based numerical classification method for facial expression features is used to obtain the driver's facial expression features. The basic idea of the sparse representation-based driver facial expression recognition algorithm is as follows: all training images and test images are first pre-processed and their features are extracted, giving a labeled training set for each expression category; a sparse representation-based classification algorithm then identifies the category to which the test image belongs.

The algorithm flow is shown in Figure 3.
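The article does not give the classifier's equations. A common formulation of sparse representation-based classification (SRC) codes the test feature vector y as a sparse combination of all unit-normalized training vectors (the columns of a dictionary A) by minimizing 0.5·‖y − Ax‖² + λ·‖x‖₁, then assigns the class whose coefficients alone reconstruct y with the smallest residual. The pure-Python sketch below illustrates that formulation under the assumption that pre-processing and feature extraction have already produced fixed-length vectors; it solves the sparse coding step with ISTA (iterative soft thresholding), and the names `src_classify`, `sparse_code`, `lam`, and `iters` are hypothetical rather than the author's implementation.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]


def normalize(v: Sequence[float]) -> Vector:
    """Scale a vector to unit L2 norm (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(t * t for t in v))
    return [t / norm for t in v] if norm > 0 else list(v)


def soft_threshold(z: float, t: float) -> float:
    """Shrink z toward zero by t (proximal operator of the L1 norm)."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def sparse_code(atoms: List[Vector], y: Vector, lam: float, iters: int) -> Vector:
    """ISTA for min_x 0.5*||y - A x||^2 + lam*||x||_1; `atoms` are the columns of A."""
    # The squared Frobenius norm upper-bounds the gradient's Lipschitz constant,
    # so 1/lip is a safe step size.
    lip = sum(a * a for atom in atoms for a in atom)
    x = [0.0] * len(atoms)
    for _ in range(iters):
        # Residual r = y - A x
        r = list(y)
        for coef, atom in zip(x, atoms):
            for d, a in enumerate(atom):
                r[d] -= coef * a
        # Gradient step x + A^T r / lip, then L1 shrinkage
        x = [
            soft_threshold(coef + sum(a * b for a, b in zip(atom, r)) / lip, lam / lip)
            for coef, atom in zip(x, atoms)
        ]
    return x


def src_classify(train: Dict[str, List[Sequence[float]]], y: Sequence[float],
                 lam: float = 0.01, iters: int = 500) -> str:
    """Return the label whose coefficients best reconstruct y (smallest residual)."""
    labels: List[str] = []
    atoms: List[Vector] = []
    for label, samples in train.items():
        for s in samples:
            labels.append(label)
            atoms.append(normalize(s))
    y_n = normalize(y)
    x = sparse_code(atoms, y_n, lam, iters)

    best_label, best_res = "", math.inf
    for label in train:
        # Reconstruct y using only the coefficients that belong to this class
        recon = [0.0] * len(y_n)
        for coef, atom, owner in zip(x, atoms, labels):
            if owner == label:
                for d, a in enumerate(atom):
                    recon[d] += coef * a
        res = math.sqrt(sum((u - v) ** 2 for u, v in zip(y_n, recon)))
        if res < best_res:
            best_label, best_res = label, res
    return best_label
```

For example, with toy training vectors `{"happy": [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]], "angry": [[0.0, 0.0, 1.0], [0.0, 0.1, 0.9]]}`, the call `src_classify(train, [0.95, 0.05, 0.0])` should return `"happy"`. ISTA is used here only because it needs nothing beyond the standard library; any L1 solver or a greedy method such as orthogonal matching pursuit could be substituted for the sparse coding step.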

3 Simulation Experiments

The TQ210 development board from Tiancai Computer Technology Co., Ltd., which uses the Samsung Cortex-A8 S5PV210 chip, was used as the experimental platform. The expression recognition rate was tested with two verification methods: Person-dependent and Person-independent. In Person-dependent verification, expression images of the same person may appear in both the training set and the test set, so this method is easier and gives better results. In Person-independent verification, the people in the training set and the test set do not overlap; recognition is harder, but this method is closer to practical use. The experiments were performed separately on the JAFFE (Japanese Female Facial Expression) database and the AR face database. The literature proposes the Facial Action Coding System (FACS), which uses 44 action units to describe facial expression changes, and defines six basic emotion categories: sadness, fear, disgust, anger, happiness, and surprise. Apart from neutral, the expressions studied in this article are drawn from these six categories.
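The difference between the two verification methods comes down to how images are split. The hypothetical helpers below sketch both: a Person-dependent split shuffles individual images, so one subject can land in both sets, while a Person-independent split holds out whole subjects. Each sample is reduced to a `(subject_id, expression)` pair for illustration; a real record would also carry the extracted feature vector, and the subject IDs are invented.

```python
import random
from typing import List, Sequence, Tuple

# One labeled image, reduced here to (subject_id, expression).
Sample = Tuple[str, str]


def person_dependent_split(samples: Sequence[Sample], test_fraction: float,
                           seed: int = 0) -> Tuple[List[Sample], List[Sample]]:
    """Random split over images: one subject may appear in both sets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def person_independent_split(samples: Sequence[Sample],
                             test_subjects: Sequence[str]) -> Tuple[List[Sample], List[Sample]]:
    """Split by subject: no subject appears in both sets."""
    held_out = set(test_subjects)
    train = [s for s in samples if s[0] not in held_out]
    test = [s for s in samples if s[0] in held_out]
    return train, test


# Usage mirroring the AR setup: 120 subjects x 4 expressions = 480 images,
# with 100 subjects for training and the remaining 20 for testing.
expressions = ["neutral", "happy", "angry", "surprised"]
subjects = [f"s{i:03d}" for i in range(120)]
samples = [(s, e) for s in subjects for e in expressions]
train, test = person_independent_split(samples, subjects[100:])
# 400 training images, 80 test images, no shared subjects
```

Assuming one image per expression per person (which the AR total of 480 images for 120 people implies), the 100/20 subject split yields 400 training and 80 test images.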

3.1 JAFFE Expression Library

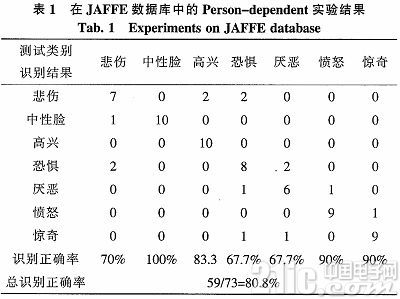

The JAFFE database contains 213 facial expression images of Japanese women (each 256 × 256 pixels), each labeled with its original expression. The database covers 10 people, each showing 7 expressions (neutral, happy, sad, surprised, angry, disgusted, and fearful), with 2 to 3 images per expression. Table 1 gives the Person-dependent experimental results on the JAFFE database.

The experimental results show that the recognition accuracy for four expressions (neutral, happy, angry, and surprised) exceeds 80%, and the overall recognition accuracy also reaches 80%.
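Table 1 itself is not reproduced in this text, and the article does not state whether the overall rate is computed over all test images or averaged over expressions. The hypothetical helper below computes per-expression recognition rates together with an image-weighted overall rate; the two ways of computing the overall rate agree when every expression has the same number of test images, but can differ otherwise.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple


def recognition_rates(true_labels: Sequence[str],
                      predicted: Sequence[str]) -> Tuple[Dict[str, float], float]:
    """Per-expression recognition rate and overall (image-weighted) rate."""
    if len(true_labels) != len(predicted) or not true_labels:
        raise ValueError("need equal-length, non-empty label sequences")
    totals = Counter(true_labels)
    # Count correct predictions for each true expression
    correct = Counter(t for t, p in zip(true_labels, predicted) if t == p)
    per_class = {label: correct[label] / n for label, n in totals.items()}
    overall = sum(correct.values()) / len(true_labels)
    return per_class, overall
```

For instance, true labels `["happy", "happy", "angry", "angry"]` with predictions `["happy", "angry", "angry", "angry"]` give a per-expression rate of 0.5 for "happy" and 1.0 for "angry", and an overall rate of 0.75.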

3.2 AR Database

The AR Face Database was created by the Computer Vision Center in Barcelona, Spain. It contains more than 4,000 color face images of 126 volunteers (70 men and 56 women), each image 256 × 256 pixels. The database captures frontal faces of the volunteers with different facial expressions, under different lighting conditions, and with different occlusions (sunglasses and scarves). This article selected neutral, happy, angry, and surprised expression images of 120 individuals. In Person-dependent verification, all 480 expression images were used as the training set, and 40 neutral, happy, angry, and surprised expression images of 23 people were selected as the test set. In Person-independent verification, the expression images of 100 people were used as the training set and those of the remaining 20 people as the test set. The experimental results are shown in Table 2.

The experimental results show that the correct recognition rate in Person-dependent verification is 75.25%, which is satisfactory, whereas in Person-independent verification the recognition accuracy is below 50%, so the method needs further improvement.

4 Conclusion

The system adopts the S5PV210 processor running the Linux 2.6.30 kernel and uses a sparse representation-based expression classification method to automatically recognize the driver's emotional state and transmit the result, so that the management center can monitor the driver's emotional state and take corrective measures, reducing the probability of traffic accidents.
