A Generative Adversarial Network (GAN) is a neural network that learns to generate samples from a specific distribution through competition (the "adversarial" part of the name). A GAN consists of two networks, a generator and a discriminator, and is trained by pitting them against each other. Understanding a GAN therefore requires understanding each of the two networks and also how they influence each other during training. That iterative, dynamic interaction is hard for beginners to grasp, and sometimes even experts do not fully understand the entire process.
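Here is the formula behind this competition. In the original GAN formulation (Goodfellow et al., 2014), the two networks play the minimax game below. D(x) is the discriminator's estimated probability that x is real, G(z) is the generator's output for noise z, p_data is the real data distribution, and p_z is the noise distribution. GAN Lab lets you choose the loss function, so this is the textbook objective rather than necessarily the one the tool is configured with.

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```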

To address this, Minsuk Kahng and Polo Chau of the Georgia Institute of Technology, together with Nikhil Thorat, Fernanda Viégas, and Martin Wattenberg of Google Brain, developed GAN Lab. This interactive visualization tool helps users understand how a GAN works internally.

GAN Lab

Without further ado, let's try out GAN Lab.

First, visit https://poloclub.github.io/ganlab/ and wait a moment for the page to load. Then choose a data distribution in the upper-left corner. GANs are often used to generate images, but high-dimensional data such as images are hard to visualize. To show the data distribution as clearly as possible, GAN Lab uses two-dimensional data points (x, y).

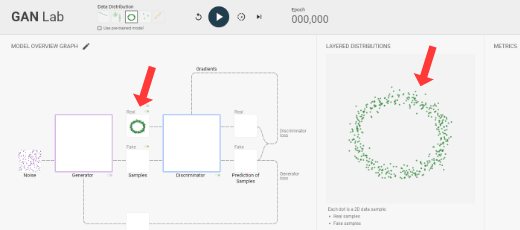

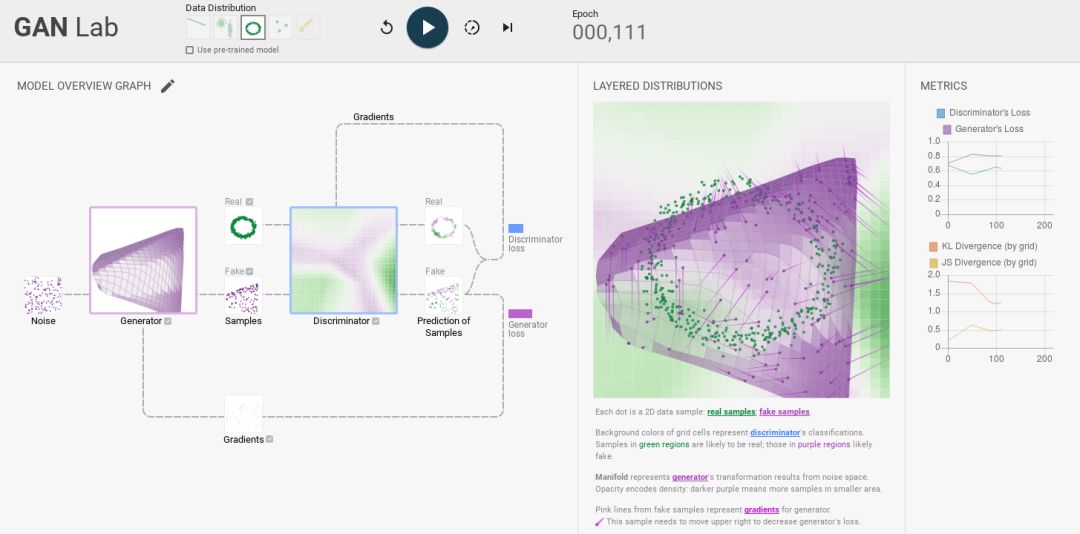

As shown in the figures, the model overview on the left includes a thumbnail of the data distribution, and the view on the right shows it at full size.
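The following minimal Python sketch shows what such a 2D dataset looks like. It samples points scattered around a ring, a toy shape chosen here only for illustration. The function name, radius, center, and noise level are assumptions, not values taken from GAN Lab.

```python
import math
import random


def sample_ring(n, radius=0.3, center=(0.5, 0.5), noise=0.02, seed=None):
    """Draw n (x, y) points scattered around a circle.

    Hypothetical toy distribution; radius, center, and noise are
    illustrative values, not GAN Lab's presets.
    """
    rng = random.Random(seed)
    points = []
    for _ in range(n):
        angle = rng.uniform(0.0, 2.0 * math.pi)   # uniform position on the circle
        r = radius + rng.gauss(0.0, noise)        # small radial jitter
        points.append((center[0] + r * math.cos(angle),
                       center[1] + r * math.sin(angle)))
    return points


for x, y in sample_ring(5, seed=0):
    print(f"({x:.3f}, {y:.3f})")
```

Each "real sample" is just a pair of coordinates, which is why the whole training process fits on a flat canvas.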

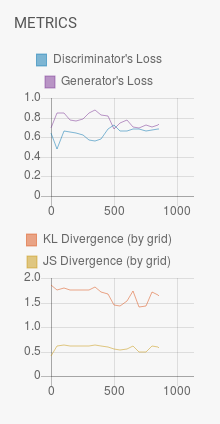

Click the run button to start training. The metrics on the far right update continuously. The top chart shows the losses of the generator and the discriminator, and the bottom chart shows the KL divergence and JS divergence between the real and generated distributions.
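The Python sketch below shows one plausible way to compute these two numbers for 2D samples. It bins both point sets onto a grid and compares the resulting histograms. The sketch makes three assumptions: the points lie in the unit square, the grid is 10×10 (a hypothetical size), and a small epsilon is added to every cell so empty cells do not produce log(0). It is an illustration of the metrics, not GAN Lab's code.

```python
import math


def grid_histogram(points, bins=10, eps=1e-6):
    """Bin (x, y) points in the unit square into a bins x bins grid and
    return the cell probabilities as a flat, normalized list."""
    counts = [0.0] * (bins * bins)
    for x, y in points:
        # Clamp so points on or outside the border land in an edge cell.
        i = min(max(math.floor(x * bins), 0), bins - 1)
        j = min(max(math.floor(y * bins), 0), bins - 1)
        counts[i * bins + j] += 1.0
    # Smooth every cell by eps so no probability is exactly zero.
    total = sum(counts) + eps * len(counts)
    return [(c + eps) / total for c in counts]


def kl_divergence(p, q):
    """KL(p || q) = sum_i p_i * log(p_i / q_i)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def js_divergence(p, q):
    """Jensen-Shannon divergence: mean KL of p and q to their mixture m."""
    m = [(pi + qi) / 2.0 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


real = [(0.1, 0.1), (0.15, 0.12), (0.9, 0.9)]
fake = [(0.1, 0.1), (0.5, 0.5), (0.9, 0.9)]
p, q = grid_histogram(real), grid_histogram(fake)
print(kl_divergence(p, q), js_divergence(p, q))
```

Both values drop toward zero as the generated points fill the same grid cells as the real ones. JS is symmetric and bounded by log 2, while KL is neither.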

In the data distribution view on the right, purple dots (generated samples) appear alongside the original green dots (real samples). As training proceeds, the generated samples keep moving and eventually come to overlap the distribution of the real samples. GAN Lab uses green and purple rather than the usual green and red because it does not want viewers to read the generated samples as something negative.

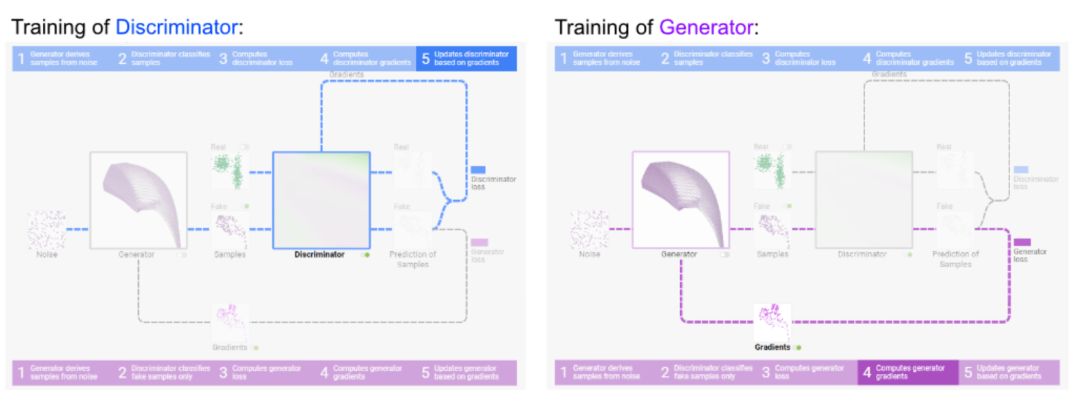

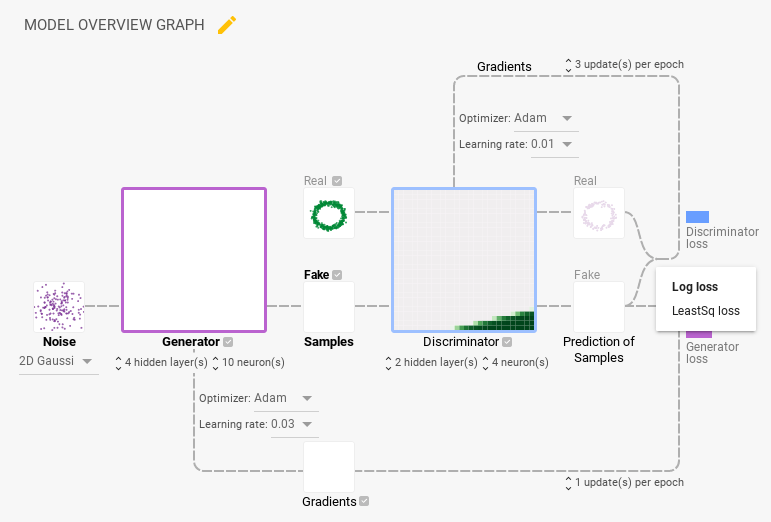

On the left is the GAN model architecture. During training, dotted lines show the direction of data flow. Next to the run button is a slow-motion button, which slows training down so you can follow the data flow more closely.

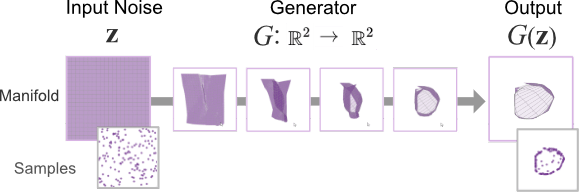

Hovering the mouse over the generator shows the manifold transformation from random noise to generated samples. Opacity encodes density: the more opaque a region, the more samples are generated in a smaller space. In addition, checking the box under Generator also displays the generator's manifold in the data distribution view on the right.
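A generator is simply a function from noise space to data space, which is what "manifold transformation" means here. The Python sketch below builds a hypothetical tiny generator with one tanh hidden layer and random, untrained weights. It also estimates how much a small noise cell is stretched or squeezed at a given point, using a finite-difference Jacobian determinant. With uniform noise and a locally one-to-one generator, the density of generated samples is inversely proportional to that factor (the change-of-variables rule). That is why squeezed regions appear more opaque.

```python
import math
import random


def make_generator(hidden=8, seed=0):
    """Return a toy generator G: R^2 -> R^2 with one tanh hidden layer.

    The weights are random and untrained; this only illustrates the mapping.
    """
    rng = random.Random(seed)
    w1 = [[rng.gauss(0, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [rng.gauss(0, 1) for _ in range(hidden)]
    w2 = [[rng.gauss(0, 0.5) for _ in range(hidden)] for _ in range(2)]
    b2 = [0.5, 0.5]

    def generate(z):
        h = [math.tanh(sum(w * zi for w, zi in zip(row, z)) + b)
             for row, b in zip(w1, b1)]
        return tuple(sum(w * hi for w, hi in zip(row, h)) + b
                     for row, b in zip(w2, b2))

    return generate


def area_scale(G, z, h=1e-4):
    """Finite-difference |det J| of G at z: > 1 means a tiny noise cell is
    stretched in data space, < 1 means it is squeezed (higher density)."""
    x0, y0 = G(z)
    xa, ya = G((z[0] + h, z[1]))
    xb, yb = G((z[0], z[1] + h))
    j11, j21 = (xa - x0) / h, (ya - y0) / h
    j12, j22 = (xb - x0) / h, (yb - y0) / h
    return abs(j11 * j22 - j12 * j21)


G = make_generator()
# A regular 3x3 grid of noise inputs; its image under G samples the manifold.
for z in [(i / 2, j / 2) for i in range(3) for j in range(3)]:
    x, y = G(z)
    print(f"z={z} -> ({x:.3f}, {y:.3f}), area scale {area_scale(G, z):.3f}")
```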

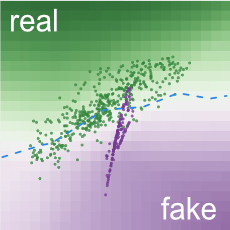

For the discriminator, the decision boundary is visualized as a two-dimensional heat map. Green marks regions the discriminator classifies as real, and purple marks regions it classifies as generated. Color intensity encodes confidence: the darker the color, the more confident the discriminator is in its judgment. As training progresses, the heat map as a whole tends toward gray, meaning it becomes increasingly difficult for the discriminator to tell real samples from fake ones. The discriminator's predictions for individual samples are shaded by confidence in the same way. Likewise, checking the box under Discriminator displays the heat map in the data distribution view on the right.
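A heat map like this can be produced by evaluating the discriminator on a grid of points. The Python sketch below assumes a hypothetical logistic discriminator on the unit square. It maps each output probability p to a class (green for p >= 0.5, purple otherwise) and a confidence of |p - 0.5| * 2. Under this scheme, a discriminator that outputs 0.5 everywhere yields an all-gray map.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def make_discriminator(w=(4.0, 4.0), b=-4.0):
    """Toy logistic discriminator D(x, y) = sigmoid(w . (x, y) + b).

    Returns the probability that a point is real. The weights are
    hypothetical, chosen so the upper-right of the unit square looks real.
    """
    def discriminate(x, y):
        return sigmoid(w[0] * x + w[1] * y + b)
    return discriminate


def heat_map(D, resolution=5):
    """Evaluate D on a resolution x resolution grid over the unit square.

    Each cell holds (label, confidence): label is 'real' if D >= 0.5, else
    'fake'; confidence is 0 at D == 0.5 (gray) and 1 at D == 0 or 1.
    """
    cells = []
    for row in range(resolution):
        y = 1.0 - row / (resolution - 1)   # top row first, as on screen
        line = []
        for col in range(resolution):
            x = col / (resolution - 1)
            p = D(x, y)
            label = "real" if p >= 0.5 else "fake"
            line.append((label, abs(p - 0.5) * 2.0))
        cells.append(line)
    return cells


# Print G (green, real) or P (purple, fake) followed by the confidence.
for line in heat_map(make_discriminator()):
    print(" ".join(f"{'G' if lab == 'real' else 'P'}{conf:.1f}" for lab, conf in line))
```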

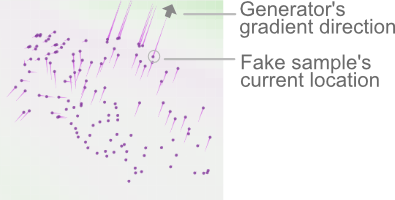

Finally, the data distribution view on the right draws the generator's gradients as purple lines. During training, these gradients point into the green areas of the background heat map, which means the generator is trying to fool the discriminator.
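The reason the gradients point into the green regions can be shown with a short calculation. Assume the common non-saturating generator loss -log D(G(z)). Then each generated sample x is pushed along the gradient of log D(x). For a hypothetical logistic discriminator D(x) = sigmoid(w . x + b), that gradient is (1 - D(x)) * w. So it points toward where D says "real," and it shrinks once a sample already fools D. The Python sketch below checks this closed form against finite differences.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


# Hypothetical logistic discriminator: D(x) = sigmoid(w . x + b).
W = (4.0, 4.0)
B = -4.0


def D(x, y):
    return sigmoid(W[0] * x + W[1] * y + B)


def generator_push(x, y):
    """Closed-form gradient of log D at (x, y): (1 - D(x)) * w.

    Descending -log D(G(z)) moves a generated sample along this vector.
    """
    scale = 1.0 - D(x, y)
    return (scale * W[0], scale * W[1])


def numeric_push(x, y, h=1e-6):
    """Central-difference estimate of the same gradient."""
    gx = (math.log(D(x + h, y)) - math.log(D(x - h, y))) / (2 * h)
    gy = (math.log(D(x, y + h)) - math.log(D(x, y - h))) / (2 * h)
    return (gx, gy)


for point in [(0.1, 0.1), (0.5, 0.5), (0.9, 0.9)]:
    print(point, generator_push(*point), numeric_push(*point))
```

A sample deep in the purple region gets a long arrow toward the green region, while a sample that already sits where D is confident it is real barely moves.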

The following picture shows the overall effect after 111 epochs:

Interactivity

Next to the slow-motion button is a step button, and each click trains one epoch. You can even choose to train only the generator or only the discriminator.

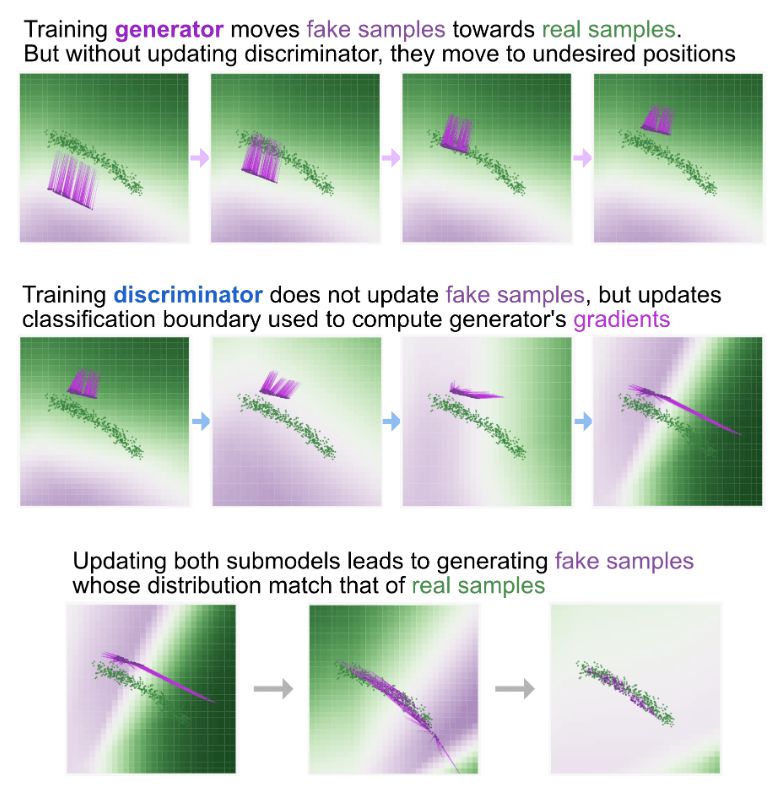

Top: training only the generator; middle: training only the discriminator; bottom: training both together.
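The step button and the train-only options amount to running one update with one or both networks frozen. The Python sketch below illustrates that idea on a deliberately tiny, hypothetical 1D GAN rather than GAN Lab's actual networks. It uses a linear generator G(z) = a*z + c and a logistic discriminator D(x) = sigmoid(w*x + b), so the gradients can be written by hand. The target distribution, learning rate, and batch size are illustrative assumptions.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


class ToyGAN:
    """Hypothetical 1D GAN: G(z) = a*z + c, D(x) = sigmoid(w*x + b)."""

    def __init__(self, lr=0.05, seed=0):
        self.rng = random.Random(seed)
        self.a, self.c = 1.0, 0.0   # generator parameters
        self.w, self.b = 0.1, 0.0   # discriminator parameters
        self.lr = lr

    def real_batch(self, n):
        return [self.rng.gauss(3.0, 0.5) for _ in range(n)]  # toy target data

    def noise_batch(self, n):
        return [self.rng.uniform(-1.0, 1.0) for _ in range(n)]

    def d_step(self, real, fake):
        """Gradient ascent on mean of log D(real) + log(1 - D(fake))."""
        gw = gb = 0.0
        for x in real:
            r = 1.0 - sigmoid(self.w * x + self.b)  # d/dt log sigmoid(t)
            gw += r * x
            gb += r
        for x in fake:
            f = -sigmoid(self.w * x + self.b)       # d/dt log(1 - sigmoid(t))
            gw += f * x
            gb += f
        n = len(real) + len(fake)
        self.w += self.lr * gw / n
        self.b += self.lr * gb / n

    def g_step(self, noise):
        """Gradient ascent on mean log D(G(z)) (non-saturating loss)."""
        ga = gc = 0.0
        for z in noise:
            x = self.a * z + self.c
            dx = (1.0 - sigmoid(self.w * x + self.b)) * self.w  # d log D / dx
            ga += dx * z
            gc += dx
        self.a += self.lr * ga / len(noise)
        self.c += self.lr * gc / len(noise)

    def step(self, train_d=True, train_g=True, batch=64):
        """One training step; the flags mimic training only D or only G."""
        if train_d:
            fake = [self.a * z + self.c for z in self.noise_batch(batch)]
            self.d_step(self.real_batch(batch), fake)
        if train_g:
            self.g_step(self.noise_batch(batch))


gan = ToyGAN()
for _ in range(200):
    gan.step()
print(f"a = {gan.a:.3f}, c = {gan.c:.3f}, w = {gan.w:.3f}, b = {gan.b:.3f}")
```

Calling `gan.step(train_g=False)` updates only the discriminator, and `gan.step(train_d=False)` updates only the generator. A linear discriminator makes this game very limited, and the two players may oscillate rather than settle. That is a small-scale echo of the dynamics GAN Lab lets you watch.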

Click the pencil icon in the model overview to edit the model's hyperparameters. These include the random noise distribution (uniform or Gaussian), the number of hidden layers in the generator and the discriminator, the number of neurons per layer, the optimizer, the learning rate, and the loss function.
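The Python sketch below gathers these settings into a hypothetical dataclass to show what they control. It also shows how two of them shape the model: the noise choice decides how generator inputs are sampled, and the layer and neuron counts fix the generator's layer widths. The field names, default values, the [-1, 1] uniform range, and the option strings are all assumptions for illustration, not GAN Lab's own identifiers.

```python
import random
from dataclasses import dataclass


@dataclass
class GanConfig:
    """Hypothetical container for the knobs exposed by the model editor.

    Names and defaults are illustrative, not GAN Lab's identifiers.
    """
    noise: str = "uniform"        # "uniform" or "gaussian"
    noise_dim: int = 2
    gen_hidden_layers: int = 1
    gen_neurons: int = 8
    disc_hidden_layers: int = 1
    disc_neurons: int = 8
    optimizer: str = "sgd"        # e.g. "sgd" or "adam"
    learning_rate: float = 0.1
    loss: str = "log"             # e.g. "log" or "least_squares"

    def __post_init__(self):
        if self.noise not in ("uniform", "gaussian"):
            raise ValueError(f"unknown noise distribution: {self.noise!r}")
        if self.learning_rate <= 0:
            raise ValueError("learning_rate must be positive")


def sample_noise(cfg, n, rng):
    """Draw n noise vectors according to cfg.noise."""
    if cfg.noise == "uniform":
        return [[rng.uniform(-1.0, 1.0) for _ in range(cfg.noise_dim)] for _ in range(n)]
    return [[rng.gauss(0.0, 1.0) for _ in range(cfg.noise_dim)] for _ in range(n)]


def layer_sizes(cfg, out_dim=2):
    """Generator layer widths: noise input, hidden layers, 2D output."""
    return [cfg.noise_dim] + [cfg.gen_neurons] * cfg.gen_hidden_layers + [out_dim]


cfg = GanConfig(noise="gaussian", gen_hidden_layers=2, gen_neurons=16)
print(layer_sizes(cfg))
print(sample_noise(cfg, 3, random.Random(0)))
```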

After a few random parameter changes, the model collapsed:

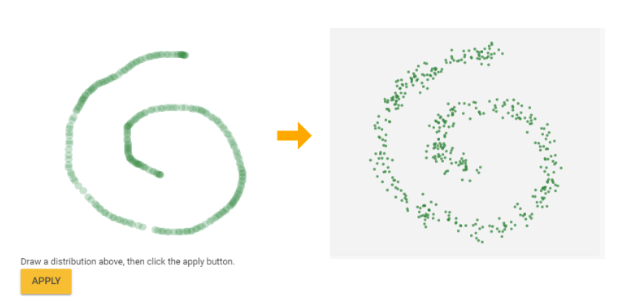

Besides editing the model hyperparameters, you can also define your own data distribution. Click the pencil icon in the data distribution area in the upper-left corner and draw a new one.

Implementation

GAN Lab is built on TensorFlow.js (one of the authors, Nikhil Thorat, is a lead developer of TensorFlow.js), so the entire GAN is trained right in the browser!

The authors have open-sourced the code. Enter the following commands to run GAN Lab on your own machine:

```
git clone https://github.com/poloclub/ganlab.git
cd ganlab
yarn prep
./scripts/watch-demo

>> Waiting for initial compile...
>> 3462522 bytes written to demo/bundle.js (2.17 seconds) at 00:00:00
>> Starting up http-server, serving ./
>> Available on:
>> http://127.0.0.1:8080
>> Hit CTRL-C to stop the server
```

There are many GAN variants. If you would like to visualize your favorite one, you are welcome to contribute to GAN Lab's development.
